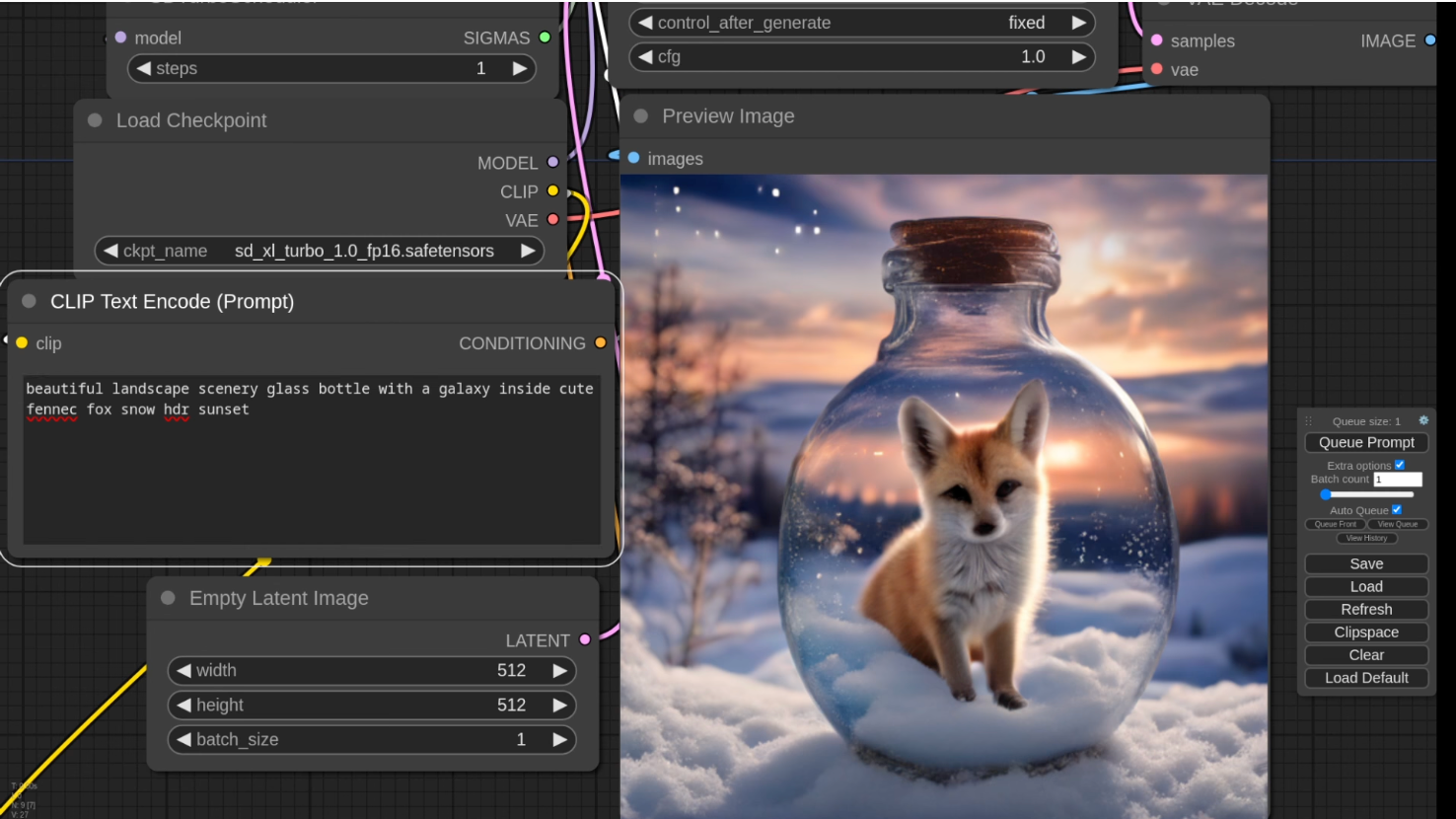

SDXL Support

You can find the workflow for live prompting with SDXL Turbo on the SDXL Turbo examples page. If SDXL is too slow for your system, an SD2.1 Turbo model is also available and can be used with the same workflow.

Frontend Improvements

- Group Nodes: You can now select multiple nodes, right-click, and choose “Convert to Group Node” to combine them into a single node.

- Undo/Redo: You can now use CTRL-Z to undo and CTRL-Y to redo actions.

- Reroute Nodes: Reroute nodes are now compatible with Primitive Nodes.

Stable Zero123

Stable Zero123 is an SD1.x model designed to generate different views of an object. Given a photograph of an object on a simple background, the model can create images of that object from various angles. Although it is typically used as a step toward generating 3D models through photogrammetry, ComfyUI implements only the diffusion model.

Examples of how to use it are available on the examples page.

FP8 Support

PyTorch supports two FP8 formats: e4m3fn and e5m2. Use FP8 only if you’re running out of memory, as it comes with a slight performance hit and lower image quality.

- --fp8_e4m3fn-unet or --fp8_e5m2-unet: Store the model (unet) weights in memory using FP8.

- --fp8_e4m3fn-text-enc or --fp8_e5m2-text-enc: Store the CLIP (text encoder) weights in FP8.
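To make the difference between the two formats concrete, here is a minimal pure-Python sketch that decodes a single FP8 byte under each layout. It assumes the commonly documented bit layouts: e4m3fn has 4 exponent bits, 3 mantissa bits, a bias of 7, and no infinities, while e5m2 has 5 exponent bits, 2 mantissa bits, a bias of 15, and IEEE-style infinities and NaNs. The helper names (decode_fp8, decode_e4m3fn, decode_e5m2) are hypothetical and are not part of PyTorch or ComfyUI.

```python
import math

def decode_fp8(byte: int, exp_bits: int, man_bits: int, bias: int, finite_only: bool) -> float:
    """Decode one FP8 byte (sign | exponent | mantissa) into a Python float."""
    sign = -1.0 if (byte >> 7) & 1 else 1.0
    exp = (byte >> man_bits) & ((1 << exp_bits) - 1)
    man = byte & ((1 << man_bits) - 1)
    exp_max = (1 << exp_bits) - 1
    man_max = (1 << man_bits) - 1

    if exp == exp_max:
        if finite_only:
            # Assumed e4m3fn rule: only the all-ones pattern is NaN;
            # other top-exponent patterns are ordinary numbers.
            if man == man_max:
                return math.nan
        else:
            # Assumed e5m2 rule: top exponent encodes infinity or NaN.
            return sign * math.inf if man == 0 else math.nan

    if exp == 0:
        # Subnormal values have no implicit leading 1.
        return sign * (man / (1 << man_bits)) * 2.0 ** (1 - bias)
    return sign * (1 + man / (1 << man_bits)) * 2.0 ** (exp - bias)

def decode_e4m3fn(byte: int) -> float:
    return decode_fp8(byte, exp_bits=4, man_bits=3, bias=7, finite_only=True)

def decode_e5m2(byte: int) -> float:
    return decode_fp8(byte, exp_bits=5, man_bits=2, bias=15, finite_only=False)

# Largest finite value in each format.
max_e4m3fn = decode_e4m3fn(0x7E)
max_e5m2 = decode_e5m2(0x7B)
```

Under these assumptions, e4m3fn tops out at 448 but keeps one more mantissa bit, while e5m2 reaches 57344 with coarser steps between values. That trade-off between range and precision is one reason the two formats can affect image quality differently.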

To store both the CLIP and model weights in FP8 e4m3fn, launch ComfyUI with:

    python main.py --fp8_e4m3fn-text-enc --fp8_e4m3fn-unet

For the standalone Windows package, copy run_nvidia_gpu.bat to run_nvidia_fp8.bat, edit it with Notepad, add the necessary arguments, and use that file to run ComfyUI.
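The copy-and-edit step for the standalone Windows package can also be scripted. The sketch below assumes that the launch line in run_nvidia_gpu.bat is the one that mentions main.py, which may not match your copy of the file, so check the result before running it. add_launch_args and make_fp8_launcher are hypothetical helpers, not part of ComfyUI.

```python
from pathlib import Path

FP8_ARGS = ["--fp8_e4m3fn-text-enc", "--fp8_e4m3fn-unet"]

def add_launch_args(bat_text: str, extra_args: list[str]) -> str:
    """Append extra_args to each line that launches main.py (assumed layout)."""
    out = []
    for line in bat_text.splitlines():
        if "main.py" in line:
            # Skip arguments that are already present so the edit is repeatable.
            missing = [a for a in extra_args if a not in line.split()]
            line = " ".join([line.rstrip(), *missing])
        out.append(line)
    return "\n".join(out) + "\n"

def make_fp8_launcher(folder: Path) -> Path:
    """Copy run_nvidia_gpu.bat to run_nvidia_fp8.bat with the FP8 flags added."""
    source = folder / "run_nvidia_gpu.bat"
    target = folder / "run_nvidia_fp8.bat"
    target.write_text(add_launch_args(source.read_text(), FP8_ARGS))
    return target
```

Calling make_fp8_launcher(Path(".")) from the folder that contains run_nvidia_gpu.bat leaves the original file untouched and writes the modified copy next to it.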

Python 3.12 and PyTorch Nightly 2.3

For maximum performance, the standalone package with Nightly PyTorch for Windows has been updated to Python 3.12 and PyTorch Nightly 2.3. It is available on the releases page. The regular standalone version uses Python 3.11 and PyTorch 2.1.2.

Self Attention Guidance

The _for_testing->SelfAttentionGuidance node sharpens images and improves consistency but slows down the generation process.

PerpNeg

The _for_testing->PerpNeg node enhances consistency by making the negative prompt more precise. To use it effectively, connect “empty_conditioning” to an empty CLIP Text Encode node.
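The idea behind Perp-Neg, and the reason the node needs an empty conditioning input, can be sketched in a few lines. The positive and negative directions are both measured relative to the empty-conditioning prediction, and only the part of the negative direction that is perpendicular to the positive one is subtracted. The pure-Python sketch below uses short lists in place of noise tensors and follows the projection as it is commonly described for Perp-Neg. It is not the node's actual code, and the names perp_neg_guidance, cond_scale, and neg_scale are illustrative assumptions.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def perpendicular_component(neg, pos):
    """Return the part of neg that is orthogonal to pos."""
    pos_norm_sq = dot(pos, pos)
    if pos_norm_sq == 0.0:
        # No positive direction to project onto; keep neg unchanged.
        return list(neg)
    scale = dot(neg, pos) / pos_norm_sq
    return [n - scale * p for n, p in zip(neg, pos)]

def perp_neg_guidance(pred_pos, pred_neg, pred_empty, cond_scale=7.0, neg_scale=1.0):
    """Combine three predictions, subtracting only the perpendicular negative part."""
    # Directions relative to the empty-conditioning prediction.
    pos = [p - e for p, e in zip(pred_pos, pred_empty)]
    neg = [n - e for n, e in zip(pred_neg, pred_empty)]
    perp = perpendicular_component(neg, pos)
    return [e + cond_scale * (p - neg_scale * q)
            for e, p, q in zip(pred_empty, pos, perp)]
```

In this sketch, a negative prompt that points in exactly the same direction as the positive prompt has no perpendicular part, so it does not cancel the positive prompt at all.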

Segmind Vega Model

To use the Segmind Vega Model, download the Segmind Vega checkpoint and place it in the ComfyUI/models/checkpoints/ folder. You can use it like a regular SDXL checkpoint.

The Segmind Vega LCM Lora is also supported. Download it from the official repo, rename it, and place it in the ComfyUI/models/loras/ folder. You can then use the LCM Lora example with the Segmind Vega LCM Lora and the Segmind Vega checkpoint. Lower the CFG to 1.2 for optimal results.
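If you prefer to script the file placement, a minimal sketch follows. It assumes the files have already been downloaded and that comfy_root points at your ComfyUI folder. The helper names and any file names you pass in are hypothetical, and the new name for the LoRA is left for you to choose.

```python
import shutil
from pathlib import Path

# Sub-folders named in the text above, relative to the ComfyUI folder.
MODEL_DIRS = {"checkpoint": "models/checkpoints", "lora": "models/loras"}

def target_path(comfy_root: Path, kind: str, filename: str) -> Path:
    """Build the destination path for a checkpoint or LoRA file."""
    return comfy_root / MODEL_DIRS[kind] / filename

def install_model(downloaded: Path, comfy_root: Path, kind: str, new_name: str = "") -> Path:
    """Move a downloaded file into place, optionally renaming it."""
    dest = target_path(comfy_root, kind, new_name or downloaded.name)
    dest.parent.mkdir(parents=True, exist_ok=True)
    shutil.move(str(downloaded), str(dest))
    return dest
```

For example, install_model(Path("downloaded_lora.safetensors"), Path("ComfyUI"), "lora", new_name="my_vega_lcm_lora.safetensors") would move and rename a LoRA in one step; both file names here are placeholders.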

Other Updates

- SaveAnimatedPNG: A new node for saving animated PNGs, a format that works well with FFmpeg, unlike animated WEBP.

- --deterministic: Enables PyTorch to use slower, deterministic algorithms that may help reproduce images consistently.

- --gpu-only: Stores intermediate values in GPU memory instead of CPU memory.

- GLora Files: Now supported for use in ComfyUI.
