What is ComfyUI ControlNet?

ControlNet is a powerful technology that enhances text-to-image diffusion models by providing precise spatial control during image generation. Integrating seamlessly with large-scale pre-trained models like Stable Diffusion, ControlNet leverages the knowledge from these models—trained on billions of images—to introduce spatial conditions such as edges, poses, depth, and segmentation maps. These spatial inputs allow users to guide image creation in ways that go beyond traditional text-based prompts.

The Technical Mechanics of ControlNet

ControlNet’s innovation lies in how it attaches to the base model. It locks the parameters of the original model, preserving the learned capabilities, and then trains a copy of the model’s encoding layers that is connected to the locked model through “zero convolutions.” These are 1×1 convolution layers whose weights and biases start at zero, so at the beginning of training they contribute nothing to the output, and the spatial conditions are introduced gradually without disrupting the model’s initial learning. This method enables ControlNet to integrate new learning pathways while maintaining the integrity of the pre-trained model.
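The effect of zero initialization can be checked with a small sketch. This is a toy model in plain Python, not ControlNet's actual code: `frozen_block` and `trainable_copy` are hypothetical stand-ins for a locked block and its trainable copy, and "pixels" are just short lists of channel values. It shows that, before any training, the controlled block returns exactly what the frozen block returns.

```python
def conv1x1(pixels, weight, bias):
    """Apply a 1x1 convolution: a per-pixel linear map across channels.

    pixels: list of pixels, each a list of C_in floats
    weight: C_out x C_in matrix, bias: list of C_out floats
    """
    return [
        [sum(w * x for w, x in zip(row, px)) + b for row, b in zip(weight, bias)]
        for px in pixels
    ]


def frozen_block(pixels):
    # Hypothetical stand-in for a locked, pre-trained block.
    return [[2.0 * x + 1.0 for x in px] for px in pixels]


def trainable_copy(pixels):
    # Hypothetical stand-in for the trainable copy of the same block.
    return [[2.0 * x + 1.0 for x in px] for px in pixels]


def controlled_block(pixels, condition, zero_in, zero_out):
    # The spatial condition enters through the first zero convolution...
    injected = conv1x1(condition, *zero_in)
    mixed = [[x + c for x, c in zip(px, cpx)] for px, cpx in zip(pixels, injected)]
    # ...and the copy's output is added back through the second one.
    residual = conv1x1(trainable_copy(mixed), *zero_out)
    base = frozen_block(pixels)
    return [[b + r for b, r in zip(bpx, rpx)] for bpx, rpx in zip(base, residual)]


channels = 2
# Zero weights and zero bias: the state of a zero convolution before training.
zeros = ([[0.0] * channels for _ in range(channels)], [0.0] * channels)

features = [[0.5, -1.0], [3.0, 2.0]]  # two "pixels", two channels each
edge_map = [[1.0, 1.0], [0.0, 1.0]]   # spatial condition with the same shape

before_training = controlled_block(features, edge_map, zeros, zeros)
print(before_training == frozen_block(features))
```

Once training moves the zero-convolution weights away from zero, the residual term starts to carry the spatial condition into the output.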

How to Use ControlNet in ComfyUI: Basic Steps

Traditionally, diffusion models rely on text prompts to generate images. ControlNet adds another layer of conditioning, enhancing the ability to direct the generated image using both textual and visual inputs.

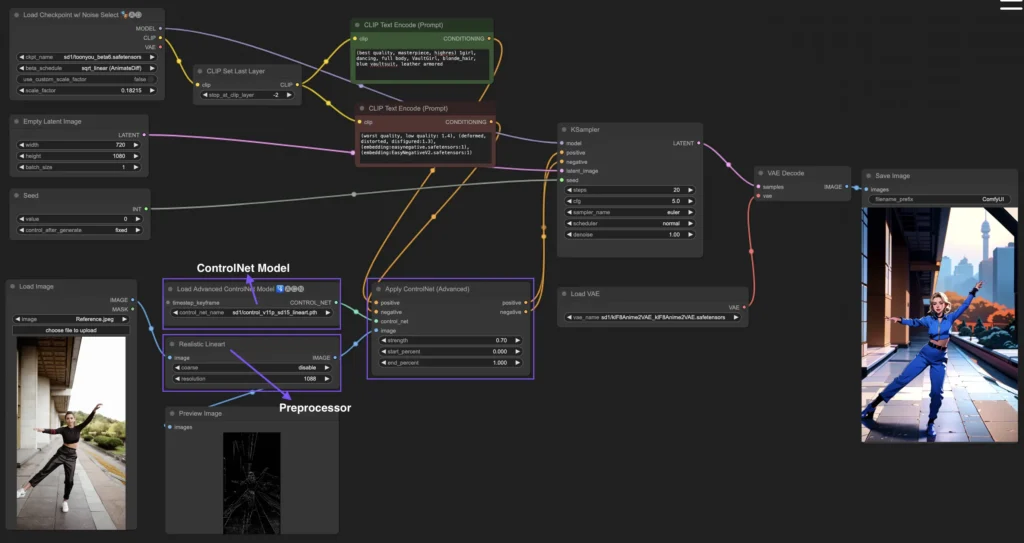

Loading the “Apply ControlNet” Node in ComfyUI

This step integrates ControlNet into your ComfyUI workflow, allowing you to apply visual guidance alongside text prompts.

Inputs of the “Apply ControlNet” Node

- Positive and Negative Conditioning: These inputs guide the generated output, determining what to include and avoid. They connect to “Positive prompt” and “Negative prompt” respectively.

- ControlNet Model: Link this to the “Load ControlNet Model” node, enabling the selection of a ControlNet or T2IAdaptor model, which provides specific visual guidance based on the model’s training. While ControlNet models are primarily discussed, T2IAdaptor models can also be used for similar purposes.

- Image (Preprocessor): Connect the “image” input to a “ControlNet Preprocessor” node so the reference image is converted into the kind of map (edges, depth, pose, and so on) that the chosen ControlNet model was trained on.

Outputs of the “Apply ControlNet” Node

The node produces two outputs: Positive and Negative Conditioning. These outputs guide the behavior of the diffusion model in ComfyUI. Afterward, users can either proceed to KSampler for further refining the image or layer additional ControlNets to achieve more detailed control.
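The wiring described above can be sketched as a workflow in ComfyUI's API (JSON) format, written here as a Python dict. Treat the details as assumptions to check against your own install: the `class_type` strings and input names follow a stock ComfyUI setup as far as I know, the file names are hypothetical, and the `Canny` preprocessor node is only one possible choice. Links are written as `[source_node_id, output_index]`.

```python
workflow = {
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "sd15_base.safetensors"}},  # hypothetical file
    "2": {"class_type": "CLIPTextEncode",  # positive prompt
          "inputs": {"text": "a dancer on a stage", "clip": ["1", 1]}},
    "3": {"class_type": "CLIPTextEncode",  # negative prompt
          "inputs": {"text": "blurry, low quality", "clip": ["1", 1]}},
    "4": {"class_type": "LoadImage",
          "inputs": {"image": "reference.png"}},  # hypothetical file
    "5": {"class_type": "Canny",  # preprocessor node (assumed name)
          "inputs": {"image": ["4", 0], "low_threshold": 0.4, "high_threshold": 0.8}},
    "6": {"class_type": "ControlNetLoader",
          "inputs": {"control_net_name": "control_canny.safetensors"}},  # hypothetical
    "7": {"class_type": "ControlNetApplyAdvanced",  # the "Apply ControlNet" node
          "inputs": {"positive": ["2", 0], "negative": ["3", 0],
                     "control_net": ["6", 0], "image": ["5", 0],
                     "strength": 1.0, "start_percent": 0.0, "end_percent": 1.0}},
    "8": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 512, "height": 512, "batch_size": 1}},
    "9": {"class_type": "KSampler",  # consumes both conditioning outputs of node 7
          "inputs": {"model": ["1", 0], "positive": ["7", 0], "negative": ["7", 1],
                     "latent_image": ["8", 0], "seed": 0, "steps": 20, "cfg": 7.0,
                     "sampler_name": "euler", "scheduler": "normal", "denoise": 1.0}},
}


def dangling_links(nodes):
    """Return (node_id, input_name) pairs whose link points at a missing node."""
    missing = []
    for node_id, node in nodes.items():
        for name, value in node["inputs"].items():
            is_link = (isinstance(value, list) and len(value) == 2
                       and isinstance(value[0], str))
            if is_link and value[0] not in nodes:
                missing.append((node_id, name))
    return missing


print(dangling_links(workflow))
```

The small `dangling_links` helper is not part of ComfyUI. It is only a quick sanity check that every link in the dict refers to a node that exists.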

Parameters for Fine-Tuning the “Apply ControlNet” Node

- Strength: This parameter controls how strongly ControlNet influences the image. A value of 1.0 applies full strength, while 0.0 disables it.

- Start Percent: Defines when, during the diffusion process, ControlNet begins influencing the image generation. For example, setting this to 20% means ControlNet starts impacting the process at 20% completion.

- End Percent: Specifies when ControlNet stops influencing the process, e.g., an end percent of 80% means its effect ends when 80% of the diffusion process is completed.
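To make the start and end percentages concrete, the sketch below lists the sampling steps during which ControlNet would be active. It assumes a simple linear mapping from percent to step index. ComfyUI may map these percentages onto the sampler's noise schedule instead, so the exact boundaries in a real run can differ. The function name is hypothetical.

```python
def controlnet_active_steps(total_steps, start_percent, end_percent):
    """Return 0-based step indices where ControlNet applies (linear approximation)."""
    if not 0.0 <= start_percent <= end_percent <= 1.0:
        raise ValueError("expected 0.0 <= start_percent <= end_percent <= 1.0")
    return [
        step for step in range(total_steps)
        if start_percent <= step / total_steps < end_percent
    ]


# 20 sampling steps, ControlNet active from 20% to 80% of the process.
active = controlnet_active_steps(20, 0.2, 0.8)
print(active)
```

With these values ControlNet skips the first four steps and the last four, which leaves the overall layout to the prompt early on and lets the sampler finish fine detail without guidance.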

ControlNet opens up new possibilities for refined and visually guided image generation, offering creators the tools to produce even more precise and customized visuals.

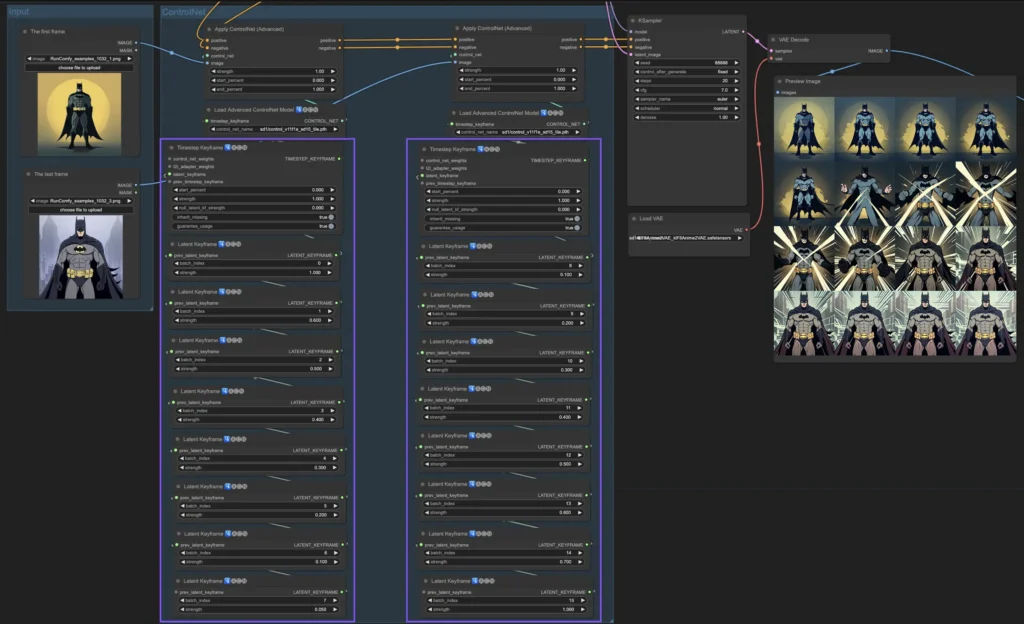

How to Use ComfyUI ControlNet: Advanced Features – Timestep Keyframes

Timestep Keyframes in ControlNet provide advanced control over AI-generated content, especially useful in scenarios like animations or evolving visuals where timing and progression are critical. Below is a detailed explanation of the key parameters, helping you utilize these features effectively:

- prev_timestep_kf: This parameter acts like a link to the previous keyframe, ensuring smooth transitions between phases. By connecting keyframes, you guide the AI through the generation process, creating a logical progression from one phase to the next, much like a storyboard that unfolds step by step.

- cn_weights: These weights allow fine-tuning of specific aspects of ControlNet during different phases of the generation. By adjusting these, you can emphasize or reduce the influence of certain features at different times, helping to control the image evolution precisely.

- latent_keyframe: This parameter adjusts how strongly different parts of the AI model affect the final output during specific phases. For instance, if you want the foreground to become more detailed over time, you can increase the latent_keyframe strength for foreground elements in later keyframes. Similarly, reduce strength for elements that should fade into the background, offering dynamic control over evolving visuals.

- mask_optional: Attention masks act as spotlights, directing ControlNet’s focus on certain areas of the image. Whether highlighting a character or emphasizing background elements, masks can apply ControlNet’s influence variably, helping you control the AI’s attention and ensuring key elements are rendered as intended.

- start_percent: This marks when a keyframe comes into play, measured as a percentage of the overall generation process. Similar to an actor’s entrance on stage, start_percent ensures that each keyframe begins influencing the generation at the exact right time.

- strength: Strength provides overall control over how much influence ControlNet exerts during that phase of the generation. Adjusting this helps you fine-tune the intensity of the keyframe’s effect on the output.

- null_latent_kf_strength: This parameter provides guidance for any aspects (latents) not explicitly directed within the current keyframe. It ensures that even those features not specifically addressed still behave in a coherent manner, maintaining the quality and flow of the output.

- inherit_missing: When this is activated, the current keyframe inherits any unspecified settings from the previous keyframe. It helps maintain continuity, ensuring you don’t need to manually replicate every detail, making the process more efficient.

- guarantee_usage: This guarantees that the current keyframe will have its moment of influence in the generation process, even if it’s only brief. It ensures that every keyframe you’ve set up has an impact, ensuring the narrative or visual progression you’ve planned is fully realized.
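The interplay of start_percent, strength, and inherit_missing can be illustrated with a toy scheduler. This is a simplified model written for this article, not the implementation used by any ComfyUI extension: the `Keyframe` class, the default strength of 1.0, and the choice to inherit only the strength value are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Keyframe:
    start_percent: float
    strength: Optional[float] = None  # None means "not specified"
    inherit_missing: bool = True


def resolve_keyframes(keyframes):
    """Sort by start_percent and fill unspecified strengths from the previous keyframe."""
    resolved = []
    previous_strength = 1.0  # assumed default when nothing precedes
    for kf in sorted(keyframes, key=lambda k: k.start_percent):
        strength = kf.strength
        if strength is None:
            strength = previous_strength if kf.inherit_missing else 1.0
        resolved.append(Keyframe(kf.start_percent, strength, kf.inherit_missing))
        previous_strength = strength
    return resolved


def strength_at(keyframes, percent):
    """Strength of the latest keyframe whose start_percent has been reached."""
    active = None
    for kf in resolve_keyframes(keyframes):
        if kf.start_percent <= percent:
            active = kf
    # Assumption: no influence before the first keyframe starts.
    return active.strength if active else 0.0


schedule = [
    Keyframe(start_percent=0.0, strength=1.0),
    Keyframe(start_percent=0.5, strength=0.4),
    Keyframe(start_percent=0.8),  # strength inherited from the 0.5 keyframe
]

for percent in (0.25, 0.6, 0.9):
    print(percent, strength_at(schedule, percent))
```

Each keyframe takes over once the process reaches its start_percent, and the third keyframe reuses the strength of the second because it leaves that setting unspecified.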

Timestep Keyframes are a powerful tool for orchestrating the progression of an image or animation in ComfyUI. They allow creators to carefully manage the evolution of visuals, offering seamless transitions from one frame to the next, and ensuring the final output aligns perfectly with their artistic vision.

Various ControlNet/T2IAdaptor Models: Detailed Overview

ControlNet models offer powerful tools for precise image generation. The families covered below range from human poses, facial details, and hand gestures to edges, depth, line art, segmentation, and inpainting. T2IAdaptor models work in a similar way and load through the same node. Here’s a breakdown of popular ControlNet models and the preprocessors that go with them.

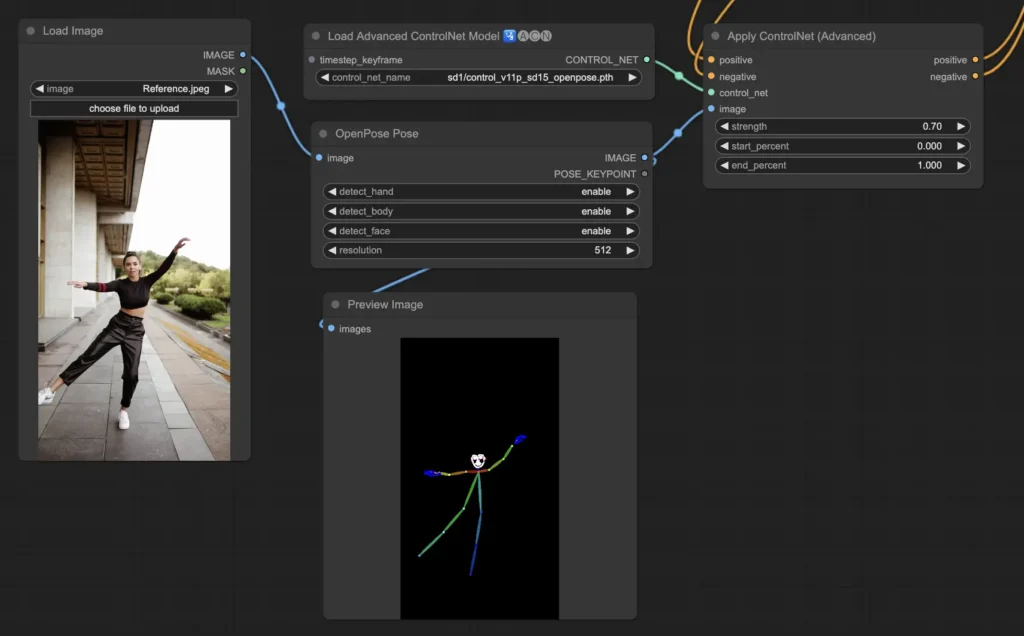

ComfyUI ControlNet Openpose Models

- Openpose (Body): The core ControlNet model for human pose replication. It captures fundamental body keypoints like eyes, nose, neck, shoulders, elbows, wrists, knees, and ankles. Ideal for basic pose generation, it ensures the correct positioning of a subject’s body in an image.

- Openpose_face: This model builds upon the basic OpenPose by incorporating facial keypoints. It allows for more precise replication of facial features and expressions, making it crucial for projects where facial details are important.

- Openpose_hand: An extension of OpenPose designed to detect the intricate keypoints of hands and fingers. It excels in reproducing complex hand gestures and positioning, enhancing the depth of body and facial poses.

- Openpose_faceonly: A specialized version focusing solely on facial details. It omits body keypoints and provides detailed facial expression and orientation replication, making it highly useful for portrait projects that emphasize facial features.

- Openpose_full: A comprehensive combination of the OpenPose, OpenPose_face, and OpenPose_hand models. This model covers the entire body, face, and hands, providing full pose replication for complex scenes that involve detailed human figures.

- DW_Openpose_full: An advanced version of Openpose_full with additional refinements. DW_Openpose_full offers enhanced accuracy in pose detection, making it the pinnacle of pose replication within the ControlNet suite.

- Preprocessor: Both Openpose and DWpose preprocessors are essential to adapt and optimize images for the corresponding ControlNet models. They ensure the images are correctly aligned and formatted before being passed into the ControlNet process for pose generation.

These models offer a wide range of applications from basic pose replication to detailed facial and hand gesturing, making them essential for projects that require high-precision human representation in generated images.

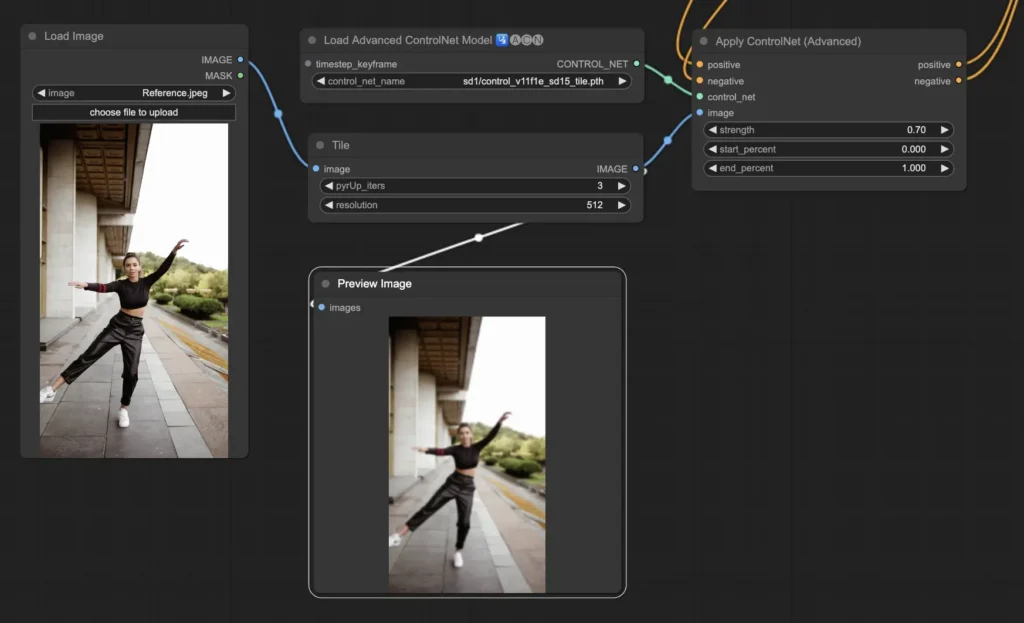

ComfyUI ControlNet Tile

The Tile Resample model is designed for enhancing fine details in images, particularly when used alongside an upscaler. Its primary function is to increase the image resolution while sharpening and enriching textures, making it ideal for adding intricacy to surfaces and elements within an image. By utilizing this model, you can significantly improve the clarity and visual quality of your outputs, especially when scaling up images for larger formats or higher-definition displays.

Preprocessor: Tile

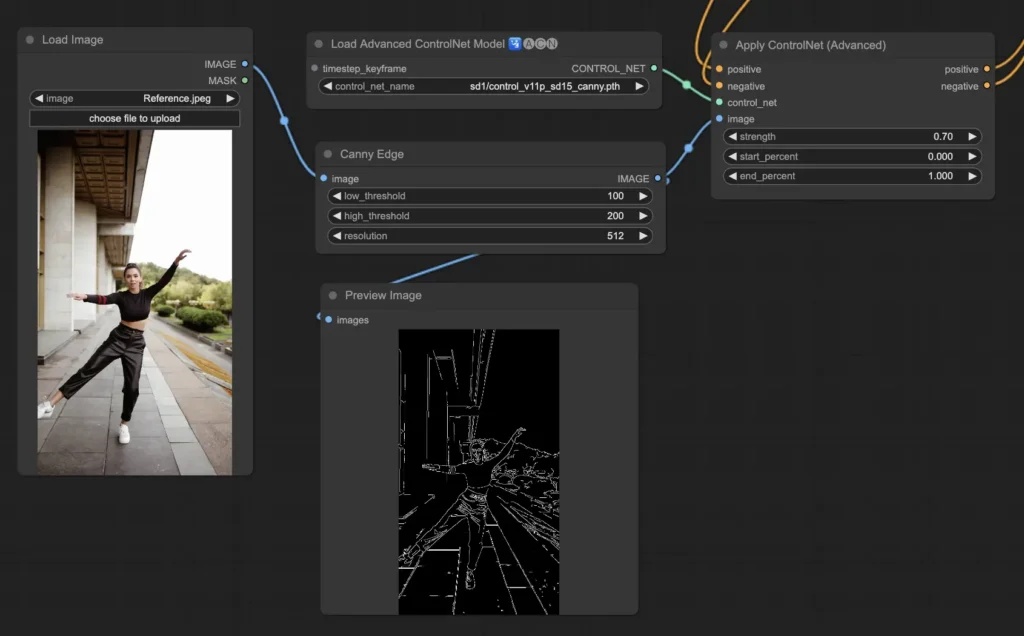

ComfyUI ControlNet Canny

The Canny model utilizes the Canny edge detection algorithm, which is a multi-stage process designed to detect a wide range of edges in an image. This model excels at preserving the structural integrity of an image while simplifying its visual components, making it ideal for stylized art or as a pre-processing step before further image manipulation. It is particularly useful when you want to emphasize the contours and shapes within an image, offering enhanced control over linework and structure in your outputs.

Preprocessor: Canny

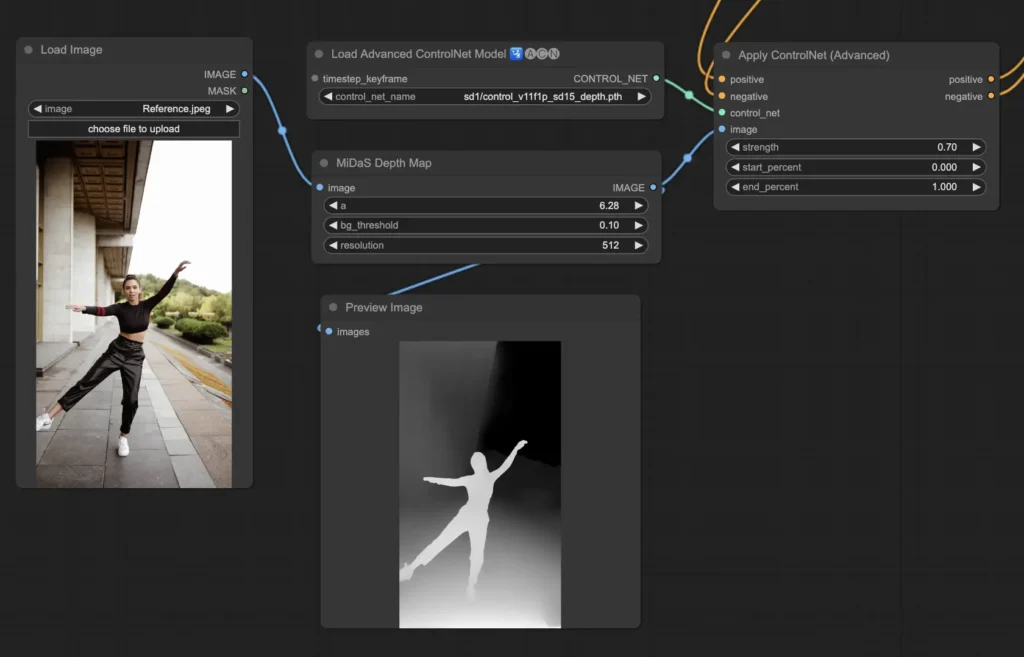

ComfyUI ControlNet Depth

The Depth models in ControlNet provide depth information from 2D images by creating grayscale depth maps that represent perceived distances. Each variant is tailored to balance detail capture and background emphasis:

- Depth Midas: Offers classic depth estimation, effectively balancing detail and background rendering for general use.

- Depth Leres: Prioritizes enhanced details while incorporating more background elements, making it suitable for intricate scenes.

- Depth Leres++: Provides an advanced level of detail for depth information, ideal for complex compositions.

- Zoe: Balances detail levels between the Midas and Leres models, offering versatility for various applications.

- Depth Anything: A newer model designed for improved depth estimation across a wide range of scenes, ensuring robust performance.

- Depth Hand Refiner: Specifically focuses on enhancing hand details in depth maps, making it crucial for scenes where hand positioning is significant.

Preprocessors: Depth_Midas, Depth_Leres, Depth_Zoe, Depth_Anything, MeshGraphormer_Hand_Refiner. The Depth ControlNet model is robust and also works with real depth maps exported from rendering engines instead of estimated ones.
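When a depth map comes from a rendering engine rather than a preprocessor, it usually has to be converted into the grayscale form the model expects. The sketch below assumes the common convention that nearer surfaces are brighter and uses a plain linear mapping. Estimators such as MiDaS produce relative inverse depth, so this is an approximation, and the function name is hypothetical.

```python
def depth_to_grayscale(depth_rows):
    """Map raw distances to 8-bit grayscale: nearest = 255 (white), farthest = 0."""
    flat = [d for row in depth_rows for d in row]
    near, far = min(flat), max(flat)
    if far == near:
        # A flat scene has no depth variation; render it uniformly white.
        return [[255 for _ in row] for row in depth_rows]
    scale = 255.0 / (far - near)
    return [[round((far - d) * scale) for d in row] for row in depth_rows]


# A tiny 2x2 z-buffer with distances in scene units.
z_buffer = [[1.0, 2.0], [4.0, 6.0]]
gray = depth_to_grayscale(z_buffer)
print(gray)
```

The resulting values can be written out as a grayscale image and connected to the ControlNet image input without running a depth preprocessor.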

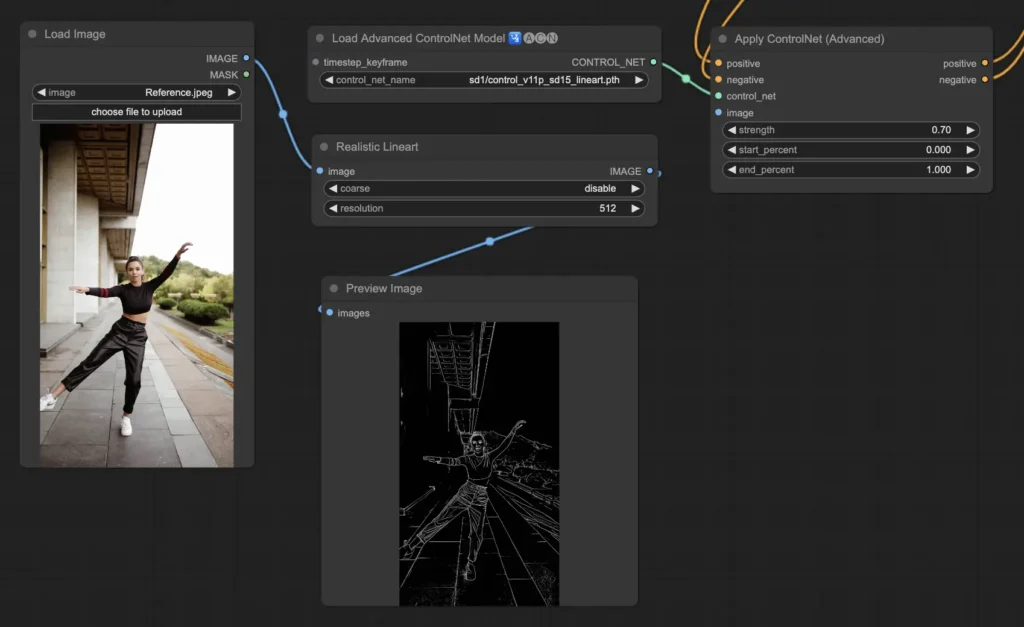

ComfyUI ControlNet Lineart

The Lineart models transform images into stylized line drawings, making them ideal for artistic interpretations or as foundational elements for further creative endeavors:

- Lineart: This standard model produces versatile stylized line drawings suitable for a wide range of artistic projects.

- Lineart Anime: Specializes in generating anime-style line drawings, known for their clean and precise lines, perfect for achieving an anime aesthetic.

- Lineart Realistic: Creates line drawings with a realistic flair, capturing intricate details and the essence of the subject, ideal for lifelike representations.

- Lineart Coarse: Generates bold line drawings with heavier lines, creating a striking effect that is particularly effective for bold artistic expressions.

Preprocessors: Lineart and Lineart_Coarse, which produce detailed and coarse line art from images respectively, offering flexibility in artistic styles.

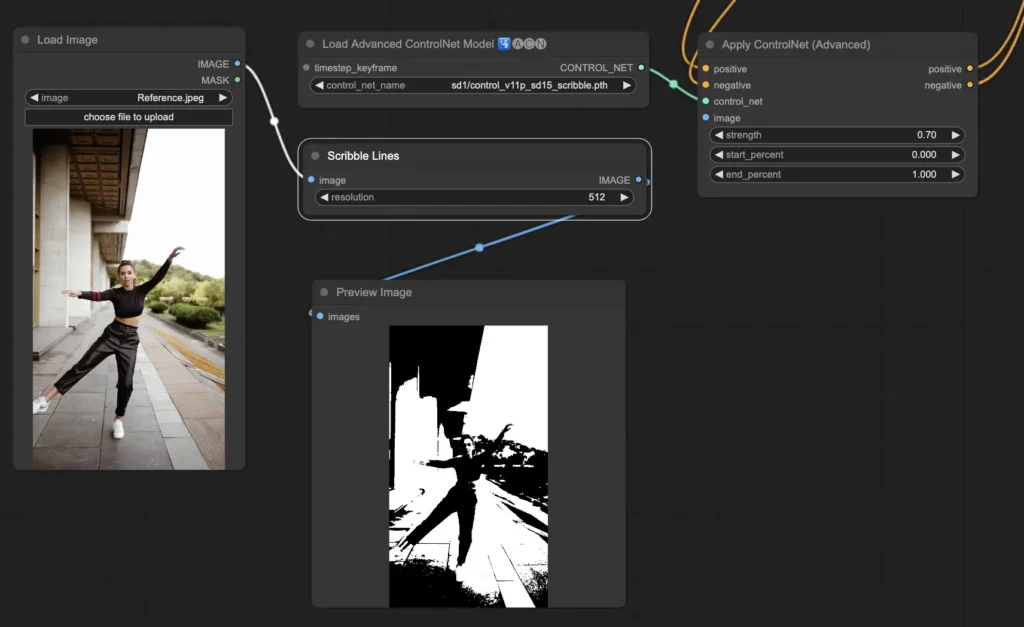

ComfyUI ControlNet Scribbles

The Scribble models are designed to give images a hand-drawn, sketch-like appearance, making them perfect for artistic restyling or as initial steps in larger design workflows:

- Scribble: Converts images into detailed artworks that mimic hand-drawn scribbles or sketches, adding a creative touch.

- Scribble HED: Utilizes Holistically-Nested Edge Detection (HED) to create outlines resembling hand-drawn sketches. It’s ideal for recoloring and restyling, adding a unique artistic flair to images.

- Scribble Pidinet: Focuses on detecting pixel differences to produce cleaner lines with less detail, making it perfect for clearer, more abstract representations. It offers crisp curves and straight edges while preserving essential elements.

- Scribble xdog: Uses the Extended Difference of Gaussian (xDoG) method for edge detection, allowing adjustable threshold settings to fine-tune the scribble effect. This versatility enables users to control the level of detail in their artwork.

Preprocessors: Scribble, Scribble_HED, Scribble_PIDI, and Scribble_XDOG.

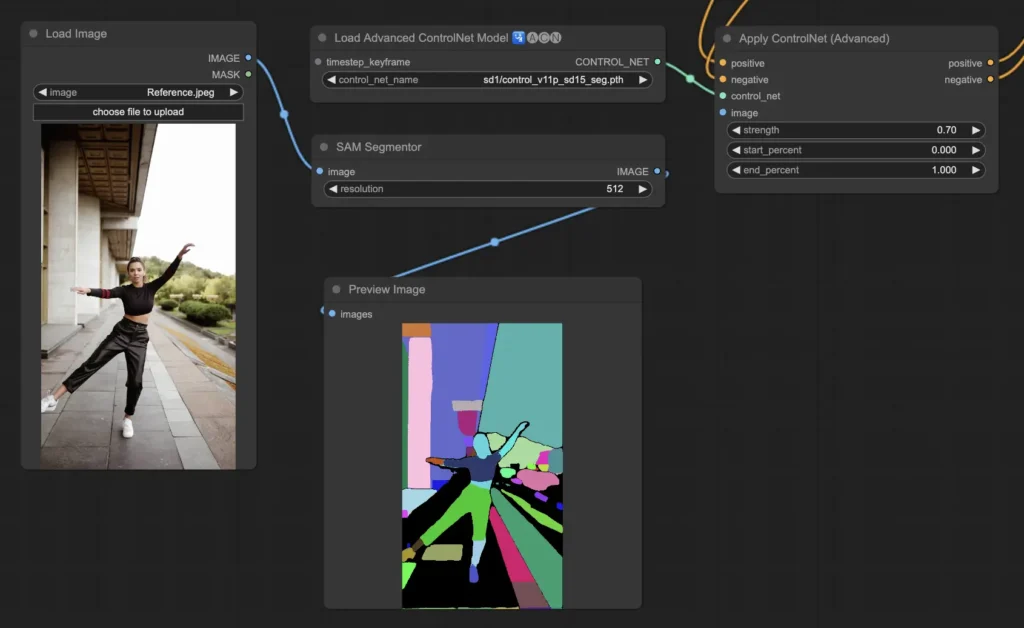

ComfyUI ControlNet Segmentation

Segmentation models categorize image pixels into distinct object classes, each represented by specific colors. This capability is invaluable for identifying and manipulating individual elements within an image, such as separating the foreground from the background or differentiating objects for detailed editing.

- Seg: Designed to differentiate objects within an image by color, translating these distinctions into clear elements in the output. For instance, it can effectively separate furniture in a room layout, making it especially valuable for projects requiring precise control over image composition and editing.

- ufade20k: Utilizes the UniFormer segmentation model trained on the ADE20K dataset, capable of distinguishing a wide range of object types with high accuracy.

- ofade20k: Employs the OneFormer segmentation model, also trained on ADE20K, offering an alternative approach to object differentiation with its unique segmentation capabilities.

- ofcoco: Leverages OneFormer segmentation trained on the COCO dataset, tailored for images containing objects categorized within the COCO dataset’s parameters, facilitating precise object identification and manipulation.

Acceptable Preprocessors: Sam, Seg_OFADE20K (Oneformer ADE20K), Seg_UFADE20K (Uniformer ADE20K), Seg_OFCOCO (Oneformer COCO), or manually created masks.

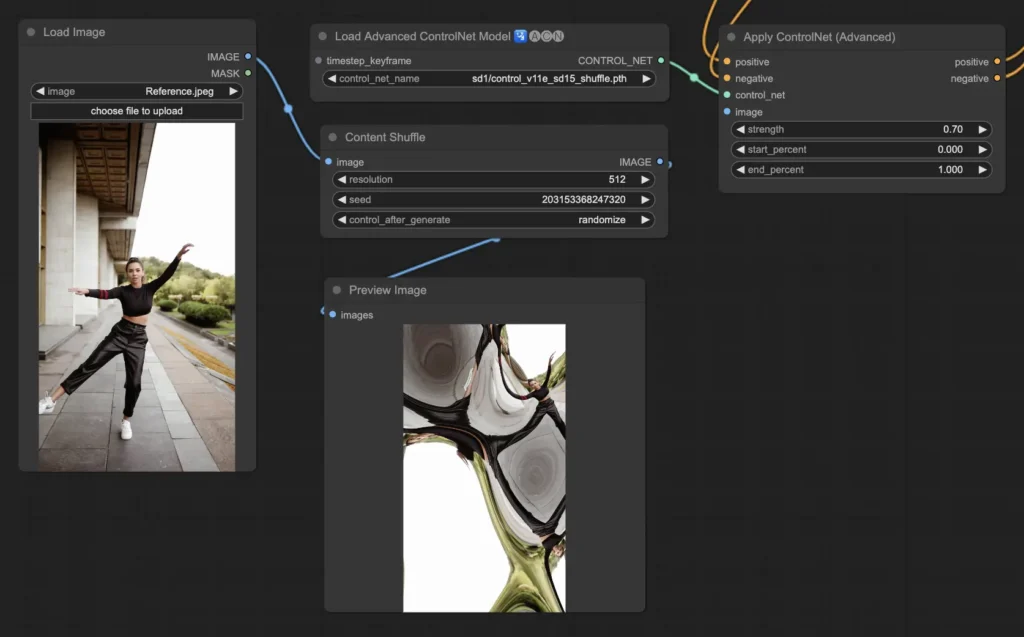

ComfyUI ControlNet Shuffle

The Shuffle model introduces a novel approach by randomizing attributes of the input image, such as color schemes or textures, while preserving its overall composition. This model is particularly effective for creative explorations, allowing users to generate variations of an image that maintain structural integrity but feature altered visual aesthetics. Each output is unique, influenced by the seed value used in the generation process.

Preprocessors: Shuffle

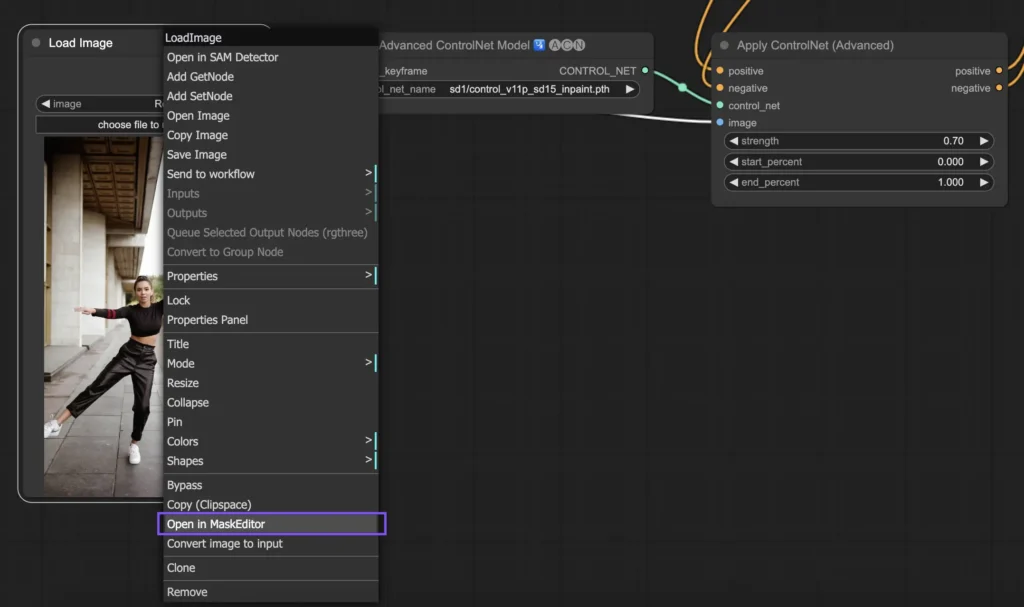

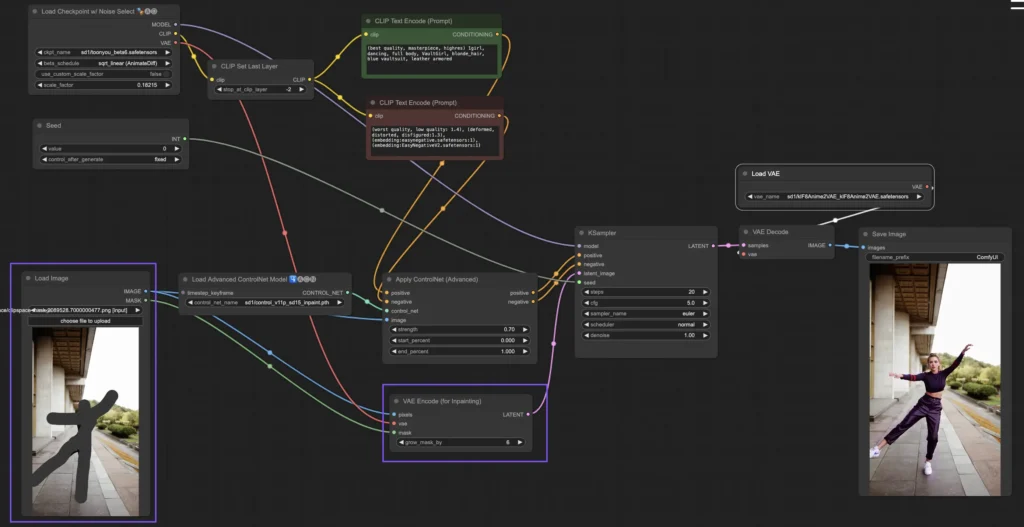

ComfyUI ControlNet Inpainting

Inpainting models within ControlNet enable precise editing in specific areas of an image, ensuring overall coherence while allowing for significant variations or corrections. To utilize ControlNet Inpainting, start by isolating the area you wish to regenerate using a mask. You can do this by right-clicking on the desired image and selecting “Open in MaskEditor” to make the necessary modifications. This feature allows for targeted adjustments, enhancing the creative flexibility of your projects.

Unlike other ControlNet workflows, Inpainting does not require a preprocessor because the modifications are applied directly to the image. However, the masked image still has to be encoded into latent space before it reaches the KSampler. This step ensures that the diffusion model regenerates only the masked area while preserving the unmasked sections. The method is especially useful for localized edits, for example in image restoration or architectural design, where precise changes are needed without altering the broader composition.
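The idea of regenerating only the masked area can be shown with a conceptual compositing sketch. It works on plain pixel values for readability. In ComfyUI the equivalent masking happens in latent space during sampling, so this illustrates the principle rather than the actual pipeline, and the function name is hypothetical.

```python
def composite(original, generated, mask):
    """Keep original pixels where mask is 0.0 and generated pixels where mask is 1.0."""
    return [
        [m * g + (1.0 - m) * o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


original = [[0.1, 0.2], [0.3, 0.4]]
generated = [[0.9, 0.9], [0.9, 0.9]]
mask = [[0.0, 1.0], [0.0, 0.0]]  # only the top-right pixel is regenerated

result = composite(original, generated, mask)
print(result)
```

Mask values between 0.0 and 1.0 would blend the two images, which is one way soft mask edges avoid visible seams.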

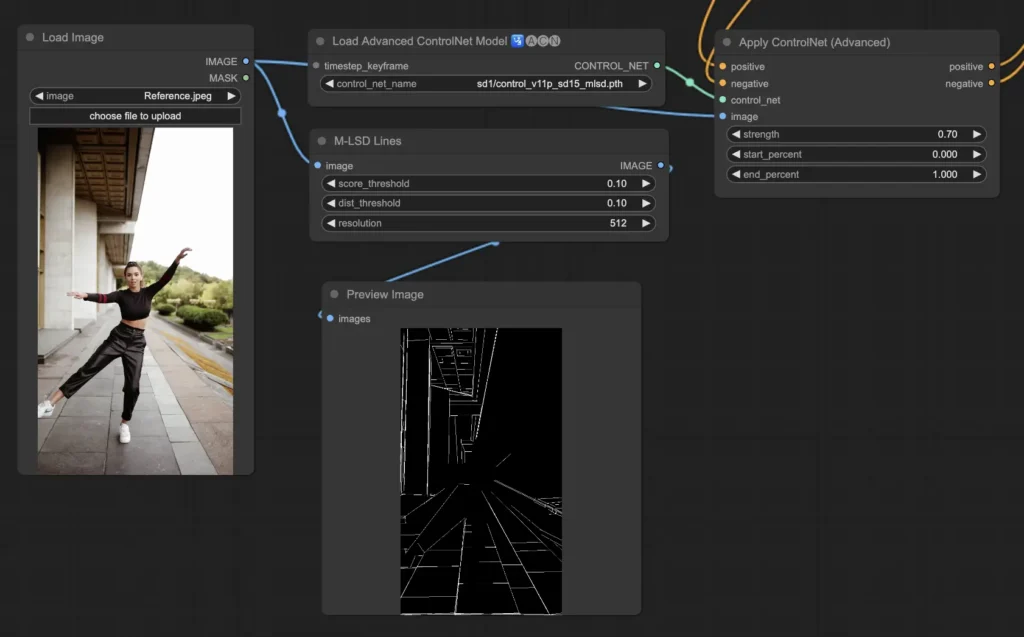

ComfyUI ControlNet MLSD

The M-LSD (Mobile Line Segment Detection) model specializes in detecting straight lines, making it perfect for images featuring architectural elements, interiors, and geometric shapes. By simplifying scenes to their structural core, it enhances creative projects centered around man-made environments.

Preprocessor: MLSD.

How to Use Multiple ComfyUI ControlNets

In ComfyUI, you can enhance your image generation by layering or chaining multiple ControlNet models, allowing for greater precision in controlling various aspects like pose, shape, style, and color.

To build your workflow, start by applying one ControlNet (e.g., OpenPose) and then feed its output into another ControlNet (e.g., Canny). This sequential application enables detailed customization, where each ControlNet contributes its specific transformations or controls. By integrating multiple ControlNets, you gain refined control over the final output, combining diverse elements guided by each model’s strengths.
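Chaining can be sketched in the same API-style dict format that ComfyUI uses for exported workflows. The helper below is hypothetical, and the `ControlNetApplyAdvanced` class name, its input names, and the node ids are assumptions to verify against your install. Each stage takes the conditioning produced by the previous one.

```python
def chain_controlnets(positive, negative, stages, first_id=100):
    """Build one apply node per stage, each consuming the previous node's conditioning."""
    nodes = {}
    for offset, stage in enumerate(stages):
        node_id = str(first_id + offset)
        nodes[node_id] = {
            "class_type": "ControlNetApplyAdvanced",  # assumed node class name
            "inputs": {
                "positive": positive,
                "negative": negative,
                "control_net": stage["control_net"],
                "image": stage["image"],
                "strength": stage.get("strength", 1.0),
                "start_percent": 0.0,
                "end_percent": 1.0,
            },
        }
        # Output 0 is positive conditioning, output 1 is negative conditioning.
        positive, negative = [node_id, 0], [node_id, 1]
    return nodes, positive, negative


stages = [
    {"control_net": ["10", 0], "image": ["11", 0], "strength": 0.9},  # e.g. OpenPose
    {"control_net": ["12", 0], "image": ["13", 0], "strength": 0.6},  # e.g. Canny
]
nodes, final_positive, final_negative = chain_controlnets(["2", 0], ["3", 0], stages)
print(final_positive, final_negative)
```

The final pair of links is what the KSampler's positive and negative inputs would connect to. Lowering the strength of later stages is a common way to keep one ControlNet from overpowering another.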
