ComfyUI now has optimized support for Genmo’s latest model, Mochi! This integration brings state-of-the-art video generation capabilities to the ComfyUI community, even if you’re working with consumer-grade GPUs.

The weights and architecture for Mochi 1 (480P) are fully open and accessible, with Mochi 1 HD slated for release later this year.

Exploring Mochi: Key Features of the Model

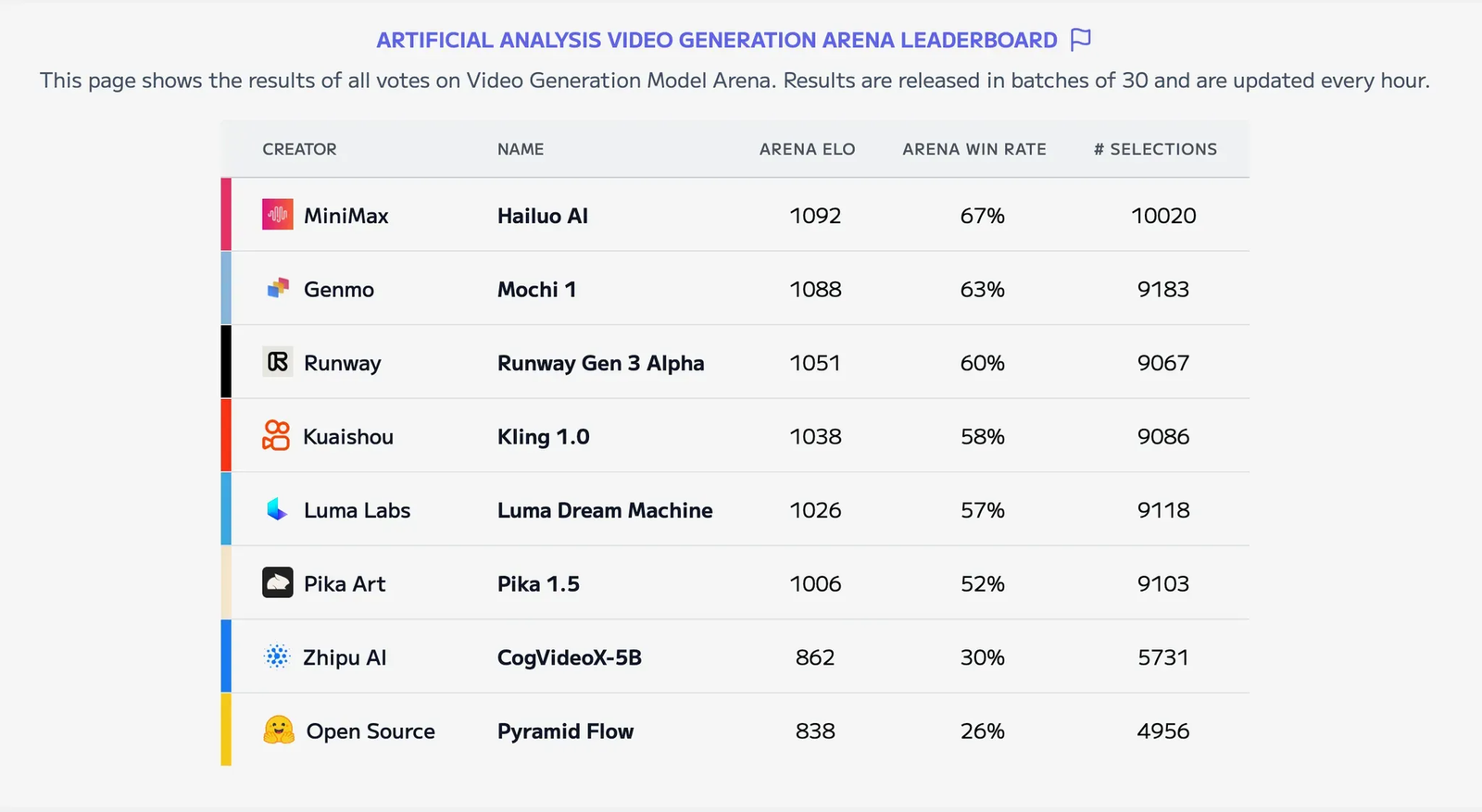

- Leading Performance

Mochi establishes a new standard in open-source video generation, offering high-quality motion fidelity. It also excels in accurately following prompt instructions.

- Apache 2.0 License

Mochi is available under the Apache 2.0 license, making it an excellent option for developers and creators. This licensing allows you to use, modify, and integrate Mochi into workflows without restrictive limitations.

- Optimized for Consumer GPUs

Mochi is now compatible with consumer GPUs, such as the 4090. The Mochi node in ComfyUI supports various attention backends, enabling it to run with less than 24GB of VRAM.

Try Mochi on ComfyUI

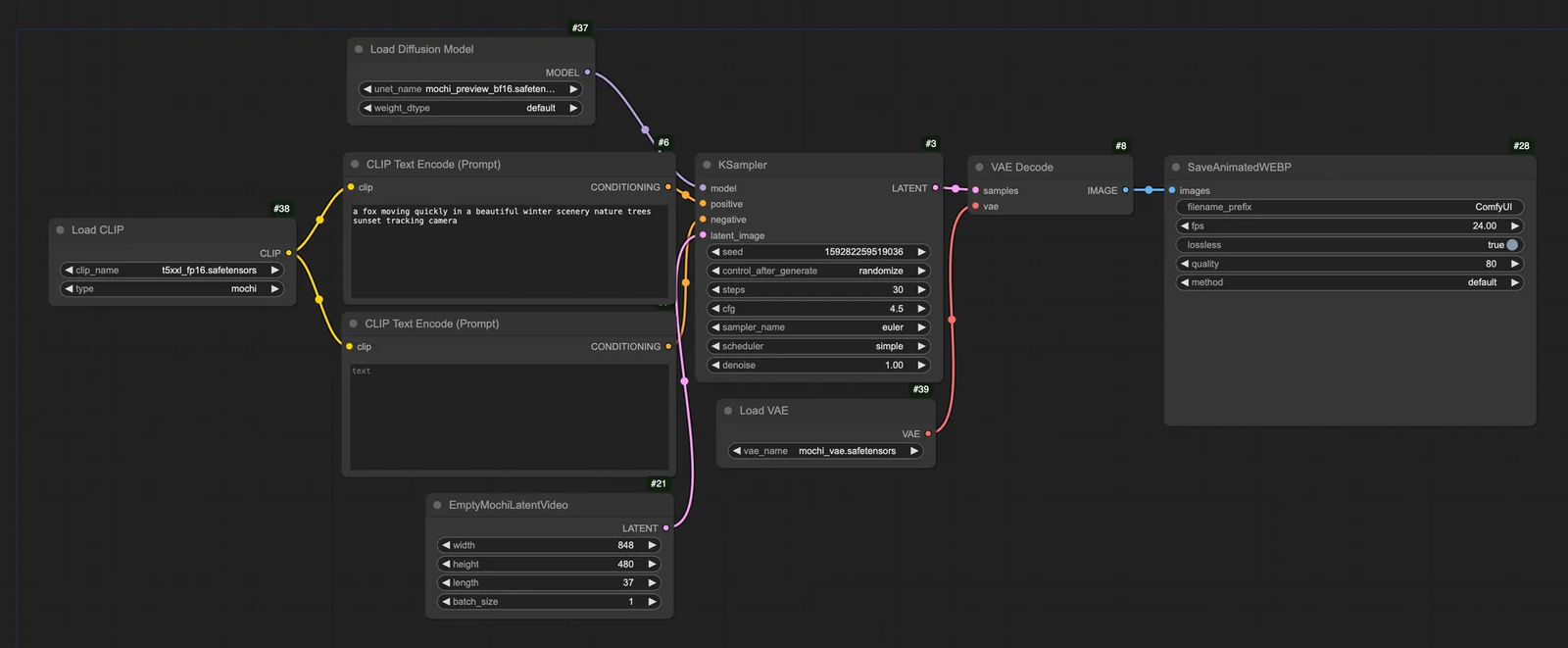

Try the following steps to run the Mochi model immediately with a standard workflow.

- Update to the latest version of ComfyUI

- Download the Mochi weights (the diffusion models) into the models/diffusion_models folder

- Make sure a text encoder is in your models/clip folder

- Download the VAE to ComfyUI/models/vae

- Find the example workflow here and start your generation!
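Before loading the workflow, it can help to confirm that each folder named in the steps above actually contains a model file. The following is a minimal sketch, not part of ComfyUI: the function name `missing_model_folders`, the install path `ComfyUI`, and the assumption that the downloaded files end in `.safetensors` are all illustrative and may not match your setup.

```python
from pathlib import Path

# Folder names are taken from the steps above.
EXPECTED_FOLDERS = ("diffusion_models", "clip", "vae")

# Assumption: the downloaded model files use the .safetensors extension.
WEIGHT_SUFFIX = ".safetensors"


def missing_model_folders(comfyui_root):
    """Return the expected model folders that are absent or hold no weight file."""
    models_dir = Path(comfyui_root) / "models"
    missing = []
    for name in EXPECTED_FOLDERS:
        folder = models_dir / name
        has_weights = folder.is_dir() and any(
            p.is_file() and p.suffix == WEIGHT_SUFFIX for p in folder.iterdir()
        )
        if not has_weights:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # "ComfyUI" is an assumed install location; point this at your own checkout.
    for name in missing_model_folders("ComfyUI"):
        print(f"models/{name} is missing or has no {WEIGHT_SUFFIX} file")
```

The check only looks at file extensions, so it cannot tell whether a file is the right model for the workflow; it is meant to catch an empty or misplaced folder early.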

Low RAM Solution

If you’re running low on RAM, we recommend the following steps while using the workflow above:

- Switch the text encoder: Try the fp8 scaled model as an alternative to t5xxl_fp16.

- Switch the diffusion model: Use the fp8_scaled diffusion model as an alternative to the bf16 model.

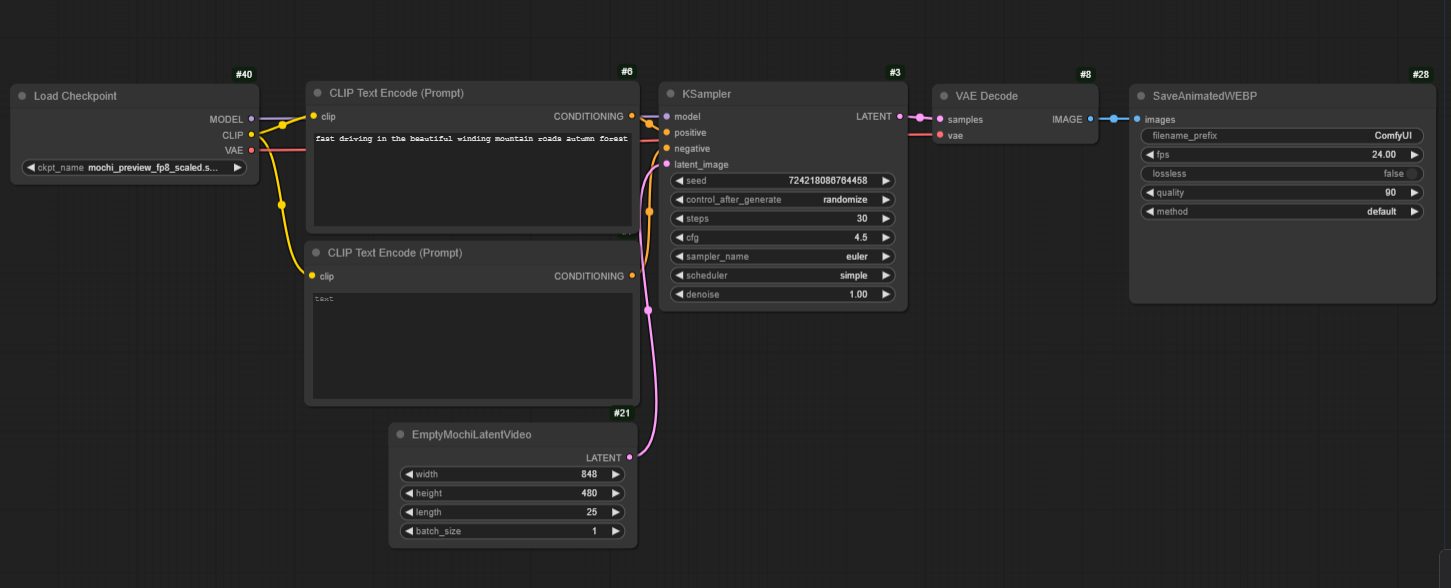
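The reason the fp8 variants help is simple arithmetic: bf16 and fp16 store each weight in 2 bytes, while fp8 stores it in 1 byte, so the weights take roughly half the memory. The sketch below illustrates this under stated assumptions. The helper `weight_bytes` and the parameter count are hypothetical and chosen for readability; they are not Mochi's actual figures, and the estimate ignores activations and the small scale tensors a scaled fp8 file may carry.

```python
# Storage size per weight, in bytes, for the precisions named above.
BYTES_PER_PARAM = {"bf16": 2, "fp16": 2, "fp8": 1}


def weight_bytes(num_params, precision):
    """Approximate bytes needed to hold num_params weights at a given precision."""
    return num_params * BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    # Hypothetical parameter count, used only to make the comparison concrete.
    example_params = 1_000_000_000
    for precision in ("bf16", "fp8"):
        gib = weight_bytes(example_params, precision) / 2**30
        print(f"{precision}: about {gib:.2f} GiB of weights")
```

Substituting the real parameter count of the model you downloaded gives a rough lower bound on the memory the weights alone will occupy.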

A Simplified Way to Start

The ComfyUI dev team has prepared an all-in-one packaged checkpoint that streamlines the process by skipping the text encoder and VAE configuration. Follow these steps:

- Download the packaged checkpoint to the models/checkpoints folder.

- Run the simplified video generation workflow as outlined above.

Note: This checkpoint packages the fp8_scaled diffusion model and the fp8 scaled text encoder by default.

Enjoy your creation!