Welcome to the ComfyUI Workflows page, your go-to resource for creating and optimizing advanced workflows in the ComfyUI environment. Whether you’re new to node-based systems or an experienced developer, this page offers comprehensive guides, examples, and best practices to help you unlock the full potential of ComfyUI.

Dive into the world of customizable workflows, explore cutting-edge tools like LoRAs, and discover how to streamline your processes for efficient and high-quality results. Start building today and take your AI projects to the next level with ComfyUI.

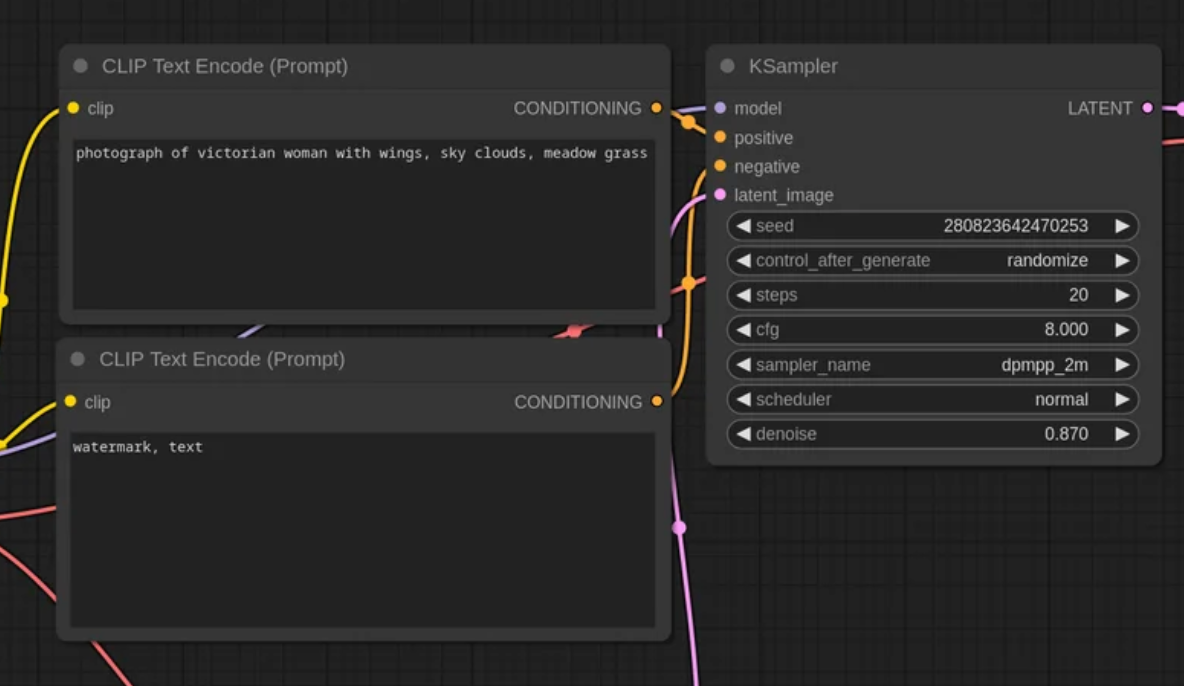

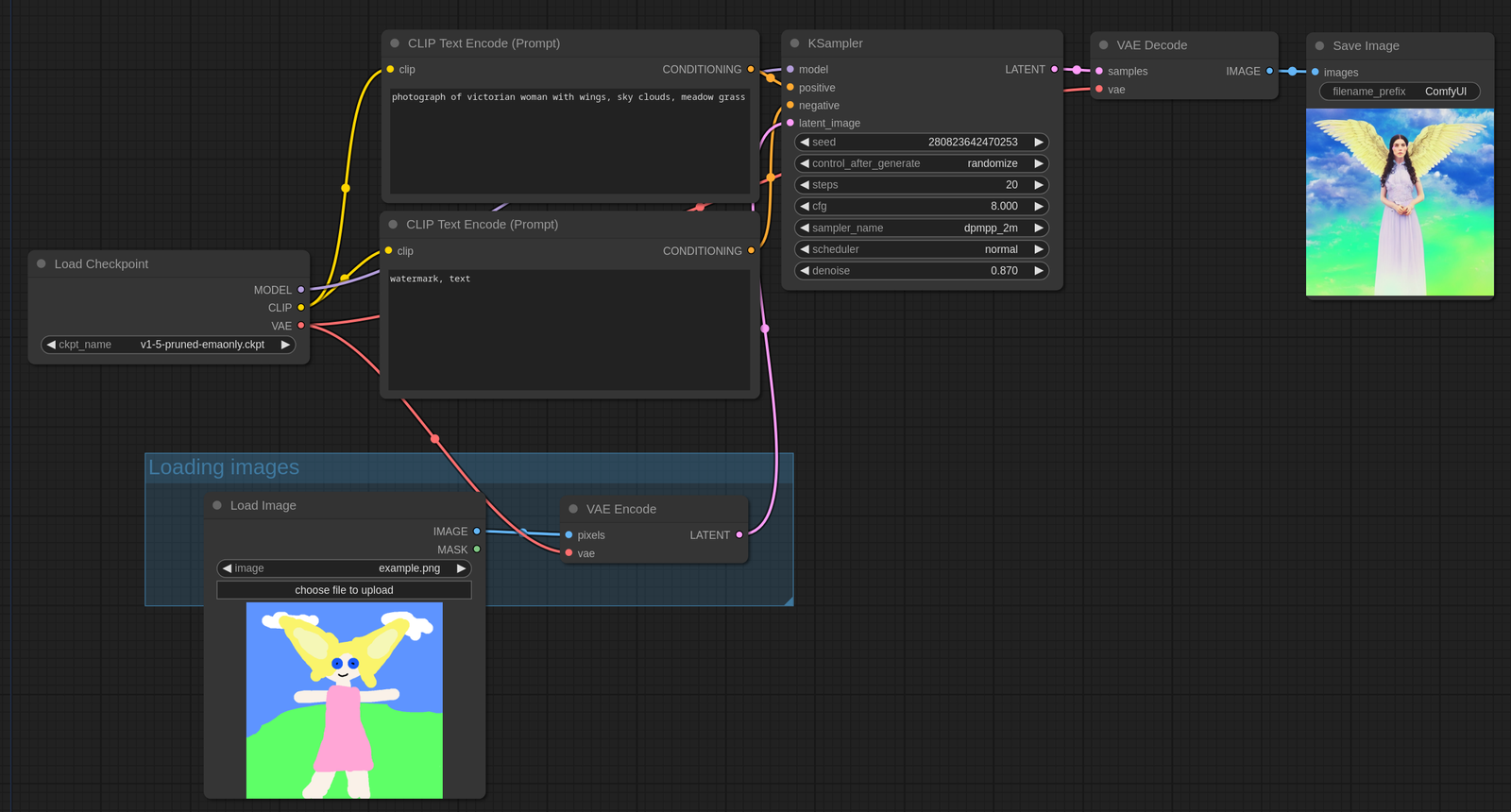

Img2img Workflow

These examples show how to set up an img2img workflow.

You can load these images in ComfyUI to access the complete workflow:

Img2Img operates by taking an input image, converting it to latent space using the VAE, and then applying sampling with a denoise value lower than 1.0. The denoise setting controls the level of noise introduced to the image; a lower denoise value results in less noise and fewer changes to the original image.
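The effect of denoise can be pictured as truncating the noise schedule: the sampler starts from a partially noised version of the input image instead of from pure noise. The block below is a minimal sketch of that idea, not ComfyUI's actual sampler code. The function name `truncated_schedule` and the linear schedule are assumptions made for illustration; real schedulers are non-linear.

```python
def truncated_schedule(steps: int, denoise: float, sigma_max: float = 1.0) -> list[float]:
    """Return the noise levels visited when sampling with a given denoise.

    Toy model (assumption): a linear schedule from sigma_max down to 0.
    Only the truncation idea is illustrated here.
    """
    if not 0.0 < denoise <= 1.0:
        raise ValueError("denoise must be in (0, 1]")
    # Build a longer schedule, then keep only its low-noise tail.
    total = int(steps / denoise)
    full = [sigma_max * (1 - i / total) for i in range(total + 1)]
    # The last `steps` intervals are the ones that are actually sampled.
    return full[-(steps + 1):]


if __name__ == "__main__":
    for d in (1.0, 0.87, 0.5):
        schedule = truncated_schedule(20, d)
        print(f"denoise={d}: start noise level {schedule[0]:.3f}, {len(schedule) - 1} steps")
```

In this toy model a denoise of 1.0 starts from the maximum noise level, which corresponds to txt2img, while lower values start closer to the original image and therefore change it less.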

Place your input images in the ComfyUI/input/ folder.

A basic img2img workflow is essentially the default txt2img workflow with two changes: the denoise is set to 0.87, and a loaded image is encoded and passed to the sampler in place of an empty latent image.
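As a rough illustration, the same graph can be written as a Python dictionary in the style of ComfyUI's API-format workflow export. This is a sketch under assumptions: the node class names and input names below should be checked against your ComfyUI version, and the file names, prompt text, and the helper `build_img2img_prompt` are hypothetical.

```python
import json


def build_img2img_prompt(image_name: str, ckpt_name: str, positive: str,
                         negative: str = "", denoise: float = 0.87,
                         seed: int = 0) -> dict:
    """Build an img2img graph; links are written as [source_node_id, output_slot]."""
    return {
        "1": {"class_type": "CheckpointLoaderSimple", "inputs": {"ckpt_name": ckpt_name}},
        # The loaded image replaces the EmptyLatentImage node of txt2img.
        "2": {"class_type": "LoadImage", "inputs": {"image": image_name}},
        "3": {"class_type": "VAEEncode", "inputs": {"pixels": ["2", 0], "vae": ["1", 2]}},
        "4": {"class_type": "CLIPTextEncode", "inputs": {"text": positive, "clip": ["1", 1]}},
        "5": {"class_type": "CLIPTextEncode", "inputs": {"text": negative, "clip": ["1", 1]}},
        "6": {"class_type": "KSampler", "inputs": {
            "model": ["1", 0], "positive": ["4", 0], "negative": ["5", 0],
            "latent_image": ["3", 0],  # encoded input image, not an empty latent
            "seed": seed, "steps": 20, "cfg": 8.0,
            "sampler_name": "euler", "scheduler": "normal",
            "denoise": denoise,  # below 1.0 keeps part of the input image
        }},
        "7": {"class_type": "VAEDecode", "inputs": {"samples": ["6", 0], "vae": ["1", 2]}},
        "8": {"class_type": "SaveImage", "inputs": {"images": ["7", 0], "filename_prefix": "img2img"}},
    }


if __name__ == "__main__":
    # Hypothetical file names; use files that exist in your installation.
    prompt = build_img2img_prompt("example.png", "model.safetensors", "a watercolor landscape")
    print(json.dumps(prompt, indent=2))
```

Setting `denoise` to 1.0 and swapping nodes 2 and 3 for an empty latent would turn this back into a plain txt2img graph.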

SDXL Workflow

The SDXL base checkpoint functions like any standard checkpoint in ComfyUI. However, for best results, set the resolution to 1024×1024 or to another resolution with roughly the same pixel count and a different aspect ratio, such as 896×1152 or 1536×640.
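A small helper can check how close a resolution is to the 1024×1024 pixel budget, or suggest a size for a given aspect ratio. This is a sketch: the function names are hypothetical, and rounding to a multiple of 64 is an assumption chosen for illustration, not a documented SDXL requirement.

```python
import math

TARGET_PIXELS = 1024 * 1024


def pixel_ratio(width: int, height: int) -> float:
    """Pixel count relative to 1024x1024 (1.0 means an identical count)."""
    return (width * height) / TARGET_PIXELS


def suggest_resolution(aspect: float, multiple: int = 64) -> tuple[int, int]:
    """Suggest (width, height) near the pixel budget for aspect = width / height."""
    width = round(math.sqrt(TARGET_PIXELS * aspect) / multiple) * multiple
    height = round(math.sqrt(TARGET_PIXELS / aspect) / multiple) * multiple
    return width, height


if __name__ == "__main__":
    for w, h in [(1024, 1024), (896, 1152), (1536, 640)]:
        print(f"{w}x{h}: {pixel_ratio(w, h):.4f} of the 1024x1024 pixel count")
    print(suggest_resolution(896 / 1152))
```

By this measure 896×1152 has about 98% of the 1024×1024 pixel count and 1536×640 has 93.75%, so the alternatives are close to the budget rather than exactly equal to it.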

To use the base checkpoint with the refiner, you can follow this workflow.

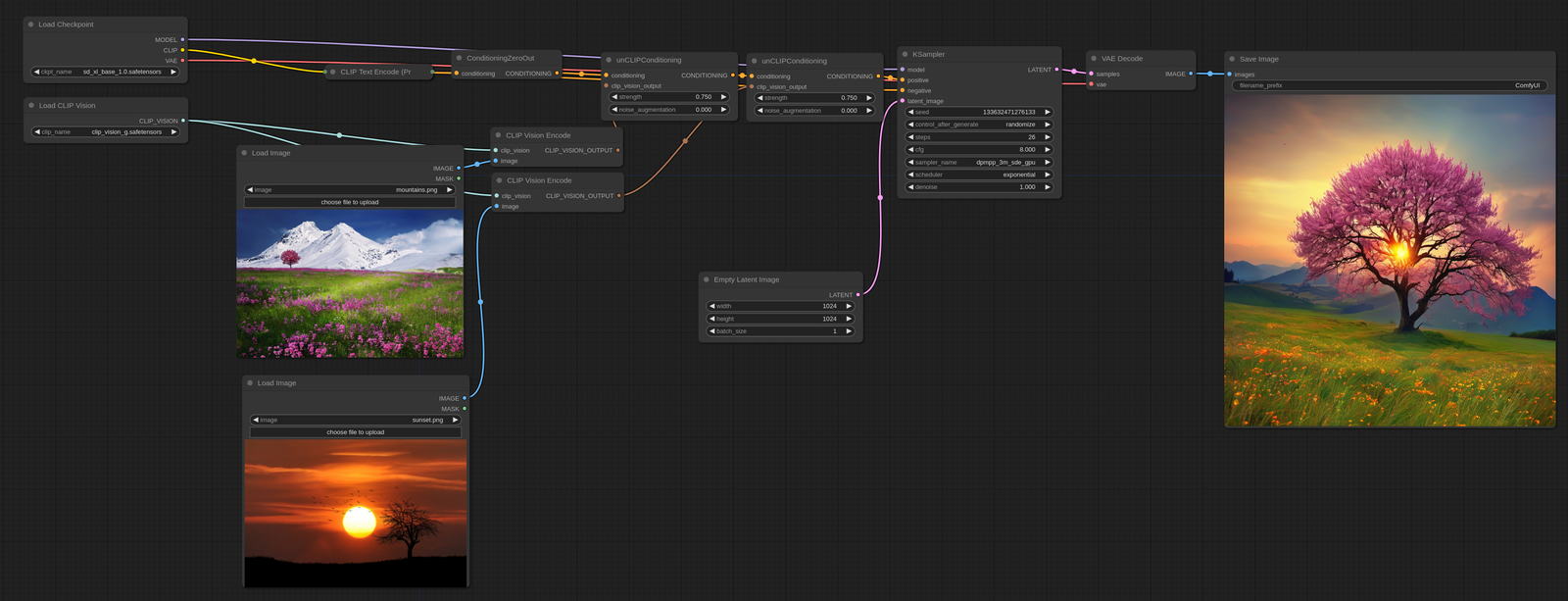

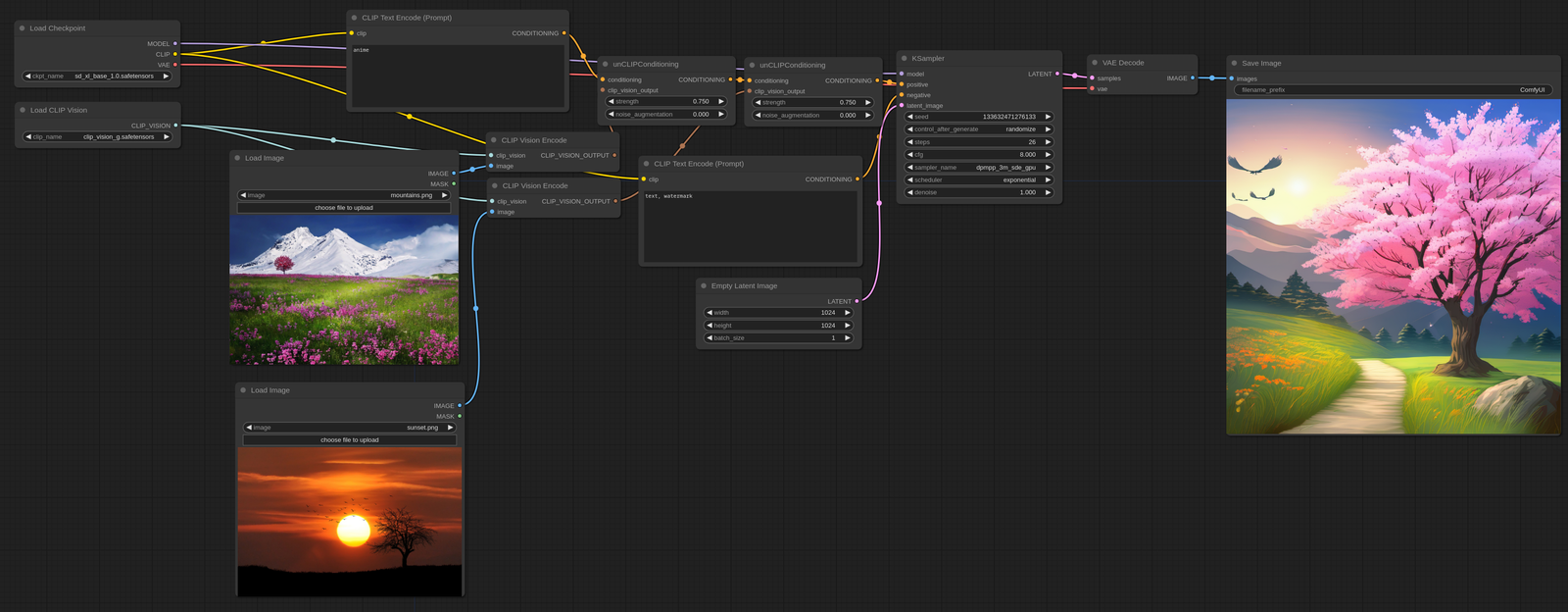

ReVision operates similarly to unCLIP but works on a more conceptual level. You can input one or more images, and ReVision will extract concepts from those images to generate new images inspired by them.

To get started, first download CLIP-G Vision and place it in your ComfyUI/models/clip_vision/ directory.

Here is an example workflow that you can drag or load into ComfyUI. In this example, the positive text prompt is zeroed out so that the final output follows the input image more closely.

If you want to use text prompts, you can use this example:

Note that the strength option can be adjusted to increase the influence each input image has on the final output. ReVision also supports an arbitrary number of input images, which can be used either by applying a single unCLIPConditioning or by chaining multiple unCLIPConditioning nodes together, as demonstrated in the examples above.
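The chaining described above can be sketched as an API-format graph fragment in which each unCLIPConditioning node takes the previous node's conditioning as input. The node class names and input names here (`CLIPVisionLoader`, `CLIPVisionEncode`, `unCLIPConditioning`, `strength`, `noise_augmentation`) are assumptions to verify against your ComfyUI version; the helper `build_revision_chain`, the file names, and the node ids are hypothetical.

```python
def build_revision_chain(images, base_conditioning, clip_vision_name, first_id=100):
    """Chain one unCLIPConditioning node per (filename, strength) pair.

    base_conditioning is a [node_id, slot] link to the starting conditioning.
    Returns the new nodes and the link to the final conditioning output.
    """
    nodes = {}
    next_id = first_id

    def add(class_type, inputs):
        nonlocal next_id
        node_id = str(next_id)
        nodes[node_id] = {"class_type": class_type, "inputs": inputs}
        next_id += 1
        return node_id

    vision = add("CLIPVisionLoader", {"clip_name": clip_vision_name})
    current = list(base_conditioning)
    for filename, strength in images:
        image = add("LoadImage", {"image": filename})
        encoded = add("CLIPVisionEncode", {"clip_vision": [vision, 0], "image": [image, 0]})
        cond = add("unCLIPConditioning", {
            "conditioning": current,          # output of the previous link in the chain
            "clip_vision_output": [encoded, 0],
            "strength": strength,             # higher values give this image more influence
            "noise_augmentation": 0.0,
        })
        current = [cond, 0]
    return nodes, current


if __name__ == "__main__":
    # Hypothetical inputs: two images with different strengths, starting
    # from the conditioning produced by node "4".
    nodes, final = build_revision_chain(
        [("first.png", 1.0), ("second.png", 0.5)], ["4", 0], "clip_vision_g.safetensors")
    print(final, len(nodes))
```

The returned link would then be connected to the sampler's positive input in place of the plain text conditioning.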

SD3 Workflow

The SD3 checkpoints that contain text encoders, sd3_medium_incl_clips.safetensors (5.5GB) and sd3_medium_incl_clips_t5xxlfp8.safetensors (10.1GB), can be used like any regular checkpoint in ComfyUI.

The two checkpoints differ in the text encoders they bundle: the first contains only two, CLIP-L and CLIP-G, while the second contains three: CLIP-L, CLIP-G, and T5XXL. Put either file in your ComfyUI/models/checkpoints/ directory.
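To confirm that a checkpoint is in the right place, a short script can look for the two file names in the checkpoints directory and report which text encoders each one bundles. This is a convenience sketch, not part of ComfyUI; the function name `installed_sd3_checkpoints` is hypothetical and the ComfyUI root path is whatever your installation uses.

```python
from pathlib import Path

# File names and bundled text encoders as listed in the text above.
SD3_CHECKPOINTS = {
    "sd3_medium_incl_clips.safetensors": ("CLIP-L", "CLIP-G"),
    "sd3_medium_incl_clips_t5xxlfp8.safetensors": ("CLIP-L", "CLIP-G", "T5XXL"),
}


def installed_sd3_checkpoints(comfy_root) -> dict:
    """Return the SD3 checkpoints found under <comfy_root>/models/checkpoints."""
    checkpoint_dir = Path(comfy_root) / "models" / "checkpoints"
    return {
        name: encoders
        for name, encoders in SD3_CHECKPOINTS.items()
        if (checkpoint_dir / name).is_file()
    }


if __name__ == "__main__":
    found = installed_sd3_checkpoints("ComfyUI")
    if not found:
        print("No SD3 checkpoint found in ComfyUI/models/checkpoints/")
    for name, encoders in found.items():
        print(f"{name}: {', '.join(encoders)}")
```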

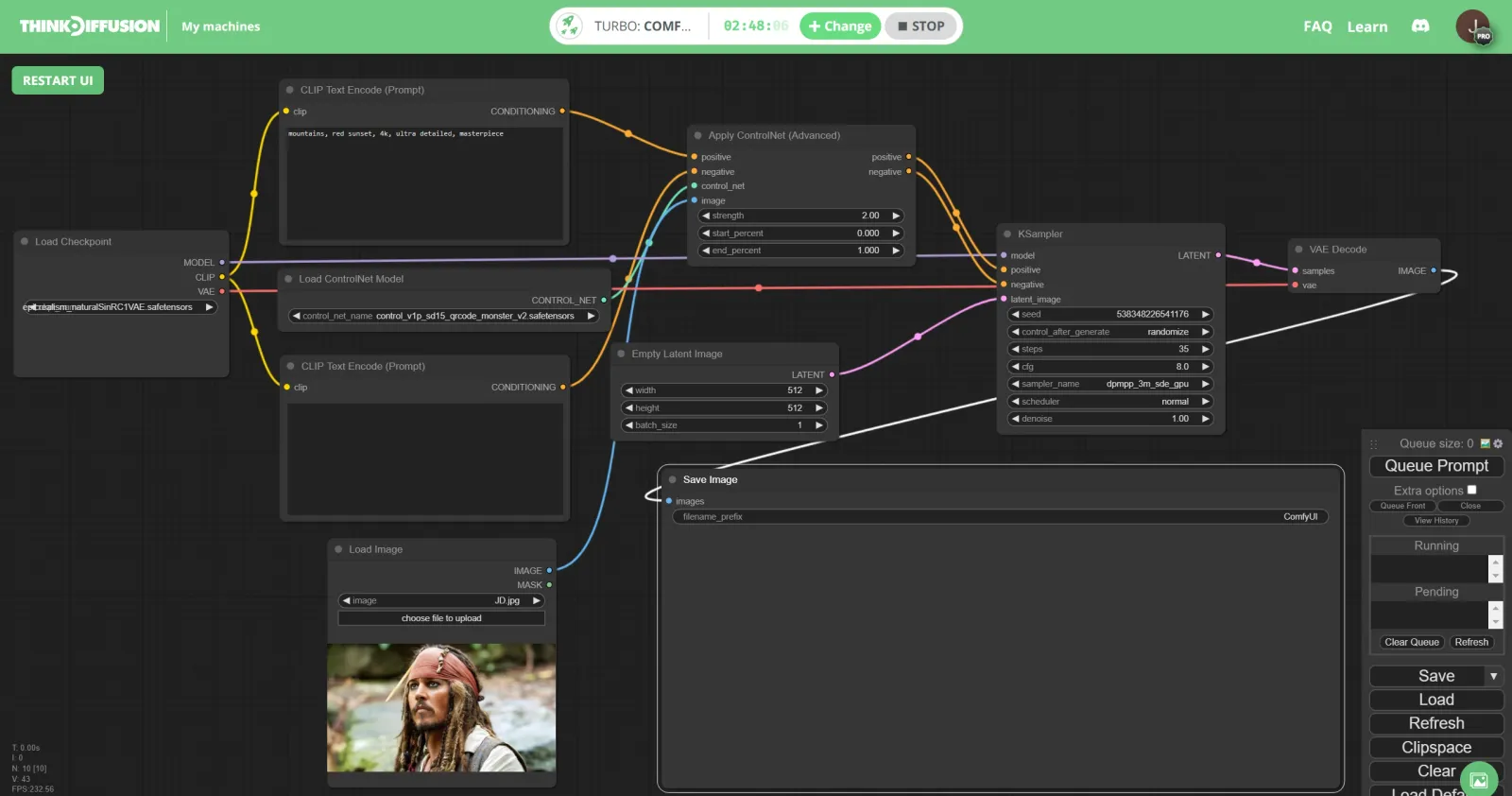

Hidden Faces Workflow

This ComfyUI workflow hides faces or text within generated images. In the workflow below, the image to hide is loaded with a LoadImage node and applied through a ControlNet (control_v1p_sd15_qrcode_monster_v2.safetensors), so its shapes are embedded as subtle details in the scene described by the text prompt.

JSON for the workflow:

{

"last_node_id": 14,

"last_link_id": 19,

"nodes": [

{

"id": 4,

"type": "CLIPTextEncode",

"pos": [

489,

117

],

"size": {

"0": 400,

"1": 200

},

"flags": {},

"order": 5,

"mode": 0,

"inputs": [

{

"name": "clip",

"type": "CLIP",

"link": 3,

"slot_index": 0

}

],

"outputs": [

{

"name": "CONDITIONING",

"type": "CONDITIONING",

"links": [

1

],

"shape": 3

}

],

"properties": {

"Node name for S&R": "CLIPTextEncode"

},

"widgets_values": [

"mountains, red sunset, 4k, ultra detailed, masterpiece"

]

},

{

"id": 8,

"type": "EmptyLatentImage",

"pos": [

946,

481

],

"size": {

"0": 315,

"1": 106

},

"flags": {},

"order": 0,

"mode": 0,

"outputs": [

{

"name": "LATENT",

"type": "LATENT",

"links": [],

"shape": 3

}

],

"properties": {

"Node name for S&R": "EmptyLatentImage"

},

"widgets_values": [

512,

512,

1

]

},

{

"id": 5,

"type": "CLIPTextEncode",

"pos": [

490,

498

],

"size": {

"0": 400,

"1": 200

},

"flags": {},

"order": 6,

"mode": 0,

"inputs": [

{

"name": "clip",

"type": "CLIP",

"link": 4,

"slot_index": 0

}

],

"outputs": [

{

"name": "CONDITIONING",

"type": "CONDITIONING",

"links": [

2

],

"shape": 3,

"slot_index": 0

}

],

"properties": {

"Node name for S&R": "CLIPTextEncode"

},

"widgets_values": [

""

]

},

{

"id": 2,

"type": "ControlNetLoader",

"pos": [

487,

378

],

"size": {

"0": 459.2667236328125,

"1": 58

},

"flags": {},

"order": 1,

"mode": 0,

"outputs": [

{

"name": "CONTROL_NET",

"type": "CONTROL_NET",

"links": [

8

],

"shape": 3,

"slot_index": 0

}

],

"properties": {

"Node name for S&R": "ControlNetLoader"

},

"widgets_values": [

"control_v1p_sd15_qrcode_monster_v2.safetensors"

]

},

{

"id": 6,

"type": "CheckpointLoaderSimple",

"pos": [

123,

326

],

"size": {

"0": 296.5630187988281,

"1": 172.85345458984375

},

"flags": {},

"order": 2,

"mode": 0,

"outputs": [

{

"name": "MODEL",

"type": "MODEL",

"links": [

15

],

"shape": 3,

"slot_index": 0

},

{

"name": "CLIP",

"type": "CLIP",

"links": [

3,

4

],

"shape": 3

},

{

"name": "VAE",

"type": "VAE",

"links": [

12

],

"shape": 3,

"slot_index": 2

}

],

"properties": {

"Node name for S&R": "CheckpointLoaderSimple"

},

"widgets_values": [

"epicrealism_naturalSinRC1VAE.safetensors"

]

},

{

"id": 9,

"type": "LoadImage",

"pos": [

556,

751

],

"size": {

"0": 315,

"1": 314

},

"flags": {},

"order": 3,

"mode": 0,

"outputs": [

{

"name": "IMAGE",

"type": "IMAGE",

"links": [

9

],

"shape": 3,

"slot_index": 0

},

{

"name": "MASK",

"type": "MASK",

"links": null,

"shape": 3

}

],

"properties": {

"Node name for S&R": "LoadImage"

},

"widgets_values": [

"JD.jpg",

"image"

]

},

{

"id": 10,

"type": "VAEDecode",

"pos": [

1881,

337

],

"size": {

"0": 210,

"1": 46

},

"flags": {},

"order": 9,

"mode": 0,

"inputs": [

{

"name": "samples",

"type": "LATENT",

"link": 18

},

{

"name": "vae",

"type": "VAE",

"link": 12,

"slot_index": 1

}

],

"outputs": [

{

"name": "IMAGE",

"type": "IMAGE",

"links": [

14

],

"shape": 3,

"slot_index": 0

}

],

"properties": {

"Node name for S&R": "VAEDecode"

}

},

{

"id": 12,

"type": "SaveImage",

"pos": [

1017,

655

],

"size": {

"0": 935.2297973632812,

"1": 410.964599609375

},

"flags": {},

"order": 10,

"mode": 0,

"inputs": [

{

"name": "images",

"type": "IMAGE",

"link": 14

}

],

"properties": {},

"widgets_values": [

"ComfyUI"

]

},

{

"id": 3,

"type": "ControlNetApplyAdvanced",

"pos": [

1052,

180

],

"size": {

"0": 315,

"1": 166

},

"flags": {},

"order": 7,

"mode": 0,

"inputs": [

{

"name": "positive",

"type": "CONDITIONING",

"link": 1,

"slot_index": 0

},

{

"name": "negative",

"type": "CONDITIONING",

"link": 2

},

{

"name": "control_net",

"type": "CONTROL_NET",

"link": 8

},

{

"name": "image",

"type": "IMAGE",

"link": 9

}

],

"outputs": [

{

"name": "positive",

"type": "CONDITIONING",

"links": [

16

],

"shape": 3,

"slot_index": 0

},

{

"name": "negative",

"type": "CONDITIONING",

"links": [

17

],

"shape": 3,

"slot_index": 1

}

],

"properties": {

"Node name for S&R": "ControlNetApplyAdvanced"

},

"widgets_values": [

2,

0,

1

]

},

{

"id": 13,

"type": "KSamplerAdvanced",

"pos": [

1466,

138

],

"size": {

"0": 315,

"1": 334

},

"flags": {},

"order": 8,

"mode": 0,

"inputs": [

{

"name": "model",

"type": "MODEL",

"link": 15,

"slot_index": 0

},

{

"name": "positive",

"type": "CONDITIONING",

"link": 16

},

{

"name": "negative",

"type": "CONDITIONING",

"link": 17

},

{

"name": "latent_image",

"type": "LATENT",

"link": 19,

"slot_index": 3

}

],

"outputs": [

{

"name": "LATENT",

"type": "LATENT",

"links": [

18

],

"shape": 3,

"slot_index": 0

}

],

"properties": {

"Node name for S&R": "KSamplerAdvanced"

},

"widgets_values": [

"enable",

718718538254054,

"randomize",

20,

8,

"euler",

"normal",

0,

10000,

"disable"

]

},

{

"id": 14,

"type": "EmptyLatentImage",

"pos": [

1041,

3

],

"size": {

"0": 315,

"1": 106

},

"flags": {},

"order": 4,

"mode": 0,

"outputs": [

{

"name": "LATENT",

"type": "LATENT",

"links": [

19

],

"shape": 3

}

],

"properties": {

"Node name for S&R": "EmptyLatentImage"

},

"widgets_values": [

512,

512,

1

]

}

],

"links": [

[

1,

4,

0,

3,

0,

"CONDITIONING"

],

[

2,

5,

0,

3,

1,

"CONDITIONING"

],

[

3,

6,

1,

4,

0,

"CLIP"

],

[

4,

6,

1,

5,

0,

"CLIP"

],

[

8,

2,

0,

3,

2,

"CONTROL_NET"

],

[

9,

9,

0,

3,

3,

"IMAGE"

],

[

12,

6,

2,

10,

1,

"VAE"

],

[

14,

10,

0,

12,

0,

"IMAGE"

],

[

15,

6,

0,

13,

0,

"MODEL"

],

[

16,

3,

0,

13,

1,

"CONDITIONING"

],

[

17,

3,

1,

13,

2,

"CONDITIONING"

],

[

18,

13,

0,

10,

0,

"LATENT"

],

[

19,

14,

0,

13,

3,

"LATENT"

]

],

"groups": [],

"config": {},

"extra": {},

"version": 0.4

}
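To see how the nodes in a workflow file like the one above are wired, the "links" table can be printed in readable form. The sketch below assumes each link is laid out as [link id, source node id, source slot, target node id, target slot, type]; that layout is read off from the JSON above rather than from a format specification, and the function names are hypothetical.

```python
def describe_links(workflow: dict) -> list[str]:
    """Render each link as 'id: SourceType[slot] -> TargetType[slot] (TYPE)'."""
    types = {node["id"]: node["type"] for node in workflow["nodes"]}
    lines = []
    for link_id, src, src_slot, dst, dst_slot, data_type in workflow["links"]:
        lines.append(
            f"{link_id}: {types[src]}[{src_slot}] -> {types[dst]}[{dst_slot}] ({data_type})"
        )
    return lines


def dangling_links(workflow: dict) -> set:
    """Link ids used by node inputs but missing from the links table."""
    known = {link[0] for link in workflow["links"]}
    used = {
        inp["link"]
        for node in workflow["nodes"]
        for inp in node.get("inputs", [])
        if inp.get("link") is not None
    }
    return used - known


# A two-node excerpt in the same shape as the workflow above.
sample = {
    "nodes": [
        {"id": 6, "type": "CheckpointLoaderSimple"},
        {"id": 4, "type": "CLIPTextEncode",
         "inputs": [{"name": "clip", "type": "CLIP", "link": 3}]},
    ],
    "links": [[3, 6, 1, 4, 0, "CLIP"]],
}

if __name__ == "__main__":
    for line in describe_links(sample):
        print(line)
    print("dangling:", dangling_links(sample))
```

To inspect the full workflow, save the JSON to a file, load it with `json.load`, and pass the result to the same functions.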